Learning Objectives At A Deeper Level

You’re likely aware that learning analytics can help improve the effectiveness of your training solutions. You also know that for learning analytics to be effective, you need the right data. And data is only as good as the questions you ask of it, and only as valuable as the decisions it enables.

What Are The Top Challenges To Enabling Learning Analytics?

Here are the two biggest challenges L&D managers face in enabling learning analytics:

- Maintaining alignment between the learning objective(s), practice activities, assessments, and analytics.

- Enabling data capture.

Examples Of Learning Objectives And Alignment With Learning Analytics

Do you know whether the learning objectives in your online training solution are tracked and enabled for learning analytics? If your answer is no, you aren’t alone. A Harvard Business Review Analytic Services survey of business leaders reveals that most organizations are not prepared for a data-driven future [1]. Of the respondents, 32% reported that their organization’s learning analytics maturity was limited to static, standardized reports. To explain this further, consider three examples of learning objectives, each at a different level of Bloom’s Taxonomy, as shown in the table below. If you had to enable learning analytics to track each of these objectives, each would require a different set of data to be captured.

| Knowledge Level | Application Level | Evaluation Level |
| --- | --- | --- |
| Given a product illustration, label the core components. | Sequence the steps for product assembly. | Given a customer scenario and a checklist of criteria, critique the technique for asking discovery questions. |
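Both SCORM 2004 (through `cmi.interactions`) and xAPI define standard interaction types, so the different data each objective needs can be declared up front. The sketch below assumes xAPI and turns each objective into an interaction Activity with its own `interactionType`. The IRIs, component names, label slots, and assembly steps are hypothetical placeholders, and modeling the critique as `long-fill-in` is one reasonable choice among several (`performance` is another).

```python
import json

# Hypothetical IRIs; real activity IDs would be IRIs your organization controls.
BASE_IRI = "https://example.com/activities/product-course"
INTERACTION = "http://adlnet.gov/expapi/activities/cmi.interaction"


def components(ids):
    """Turn plain IDs into an xAPI interaction-component list."""
    return [{"id": i, "description": {"en-US": i.replace("_", " ")}} for i in ids]


def interaction(slug, name, interaction_type, pattern=None, **component_lists):
    """Return an xAPI Activity describing one assessment interaction."""
    definition = {"name": {"en-US": name}, "type": INTERACTION,
                  "interactionType": interaction_type}
    if pattern is not None:
        definition["correctResponsesPattern"] = [pattern]
    for key, ids in component_lists.items():  # source/target or choices
        definition[key] = components(ids)
    return {"objectType": "Activity", "id": f"{BASE_IRI}/{slug}", "definition": definition}


# Knowledge level: match (hypothetical) component names to label slots on the illustration.
label = interaction(
    "label-components", "Label the core components", "matching",
    pattern="motor[.]slot_a[,]battery[.]slot_b[,]housing[.]slot_c",
    source=["motor", "battery", "housing"], target=["slot_a", "slot_b", "slot_c"])

# Application level: order the (hypothetical) assembly steps.
sequence = interaction(
    "assembly-sequence", "Sequence the assembly steps", "sequencing",
    pattern="attach_base[,]insert_battery[,]close_housing",
    choices=["insert_battery", "close_housing", "attach_base"])

# Evaluation level: a free-text critique judged against the checklist by a reviewer,
# so no machine-checkable correct-response pattern is declared.
critique = interaction(
    "critique-discovery-questions", "Critique the discovery questions", "long-fill-in")

if __name__ == "__main__":
    print(json.dumps([label, sequence, critique], indent=2))
```

The matching pattern pairs each source with a target using `[.]` and separates pairs with `[,]`. The sequencing pattern lists the correct order separated by `[,]`.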

Remember that for each of these learning objectives (each at a different Bloom’s level), you can also implement learning analytics at different levels, starting with descriptive analytics (what happened?).

What Does The Current Analytics Landscape Look Like?

Currently, many organizations focus only on capturing the passing score and the course completion status. Unfortunately, this data does little to provide insights that can be used to improve the training solution.

Going Deep With Learning Objectives

If you want to move from simple tracking of course status to in-depth tracking of learning objectives, descriptive learning analytics is a good level to begin with. How do you enable descriptive analytics, and what information can you track? Let’s go back to the knowledge-level learning objective (given a product illustration, label the core components) to see what kind of data we can gather by going deeper with descriptive analytics. In the table below, notice how asking more specific descriptive questions yields more valuable insights.

Learning Objective: Given a product illustration, label the core components.

Descriptive Analytics = what happened?

| Today’s Common Analytics | Deeper Analytics (yield more insights) |
| --- | --- |
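To make the contrast concrete, here is a minimal Python sketch that computes both kinds of descriptive metrics over hypothetical attempt records for the labeling activity. The record fields (`completed`, `passed`, `attempts`, `hints`, `wrong`) are assumptions about what the activity reports; `wrong` lists the components a learner mislabeled on their final attempt.

```python
from collections import Counter
from statistics import mean

# Hypothetical records for the "label the core components" activity,
# one per learner; "wrong" lists components mislabeled on the final attempt.
records = [
    {"learner": "a", "completed": True, "passed": True, "attempts": 1, "hints": 0, "wrong": []},
    {"learner": "b", "completed": True, "passed": True, "attempts": 2, "hints": 1, "wrong": ["battery"]},
    {"learner": "c", "completed": True, "passed": False, "attempts": 3, "hints": 2,
     "wrong": ["battery", "housing"]},
    {"learner": "d", "completed": False, "passed": False, "attempts": 1, "hints": 0, "wrong": []},
]


def common_metrics(rows):
    """Today's common analytics: completion and pass rates only."""
    n = len(rows)
    return {"completion_rate": sum(r["completed"] for r in rows) / n,
            "pass_rate": sum(r["passed"] for r in rows) / n}


def deeper_metrics(rows):
    """Deeper descriptive analytics over learners who completed the activity."""
    done = [r for r in rows if r["completed"]]
    misses = Counter(component for r in done for component in r["wrong"])
    return {
        "avg_attempts": mean(r["attempts"] for r in done),
        "hint_usage_rate": sum(r["hints"] > 0 for r in done) / len(done),
        # Most frequently mislabeled components first.
        "miss_rate_by_component": {c: k / len(done) for c, k in misses.most_common()},
    }


if __name__ == "__main__":
    print(common_metrics(records))
    print(deeper_metrics(records))
```

On this sample data, the common view only shows that half the learners passed. The deeper view also shows that "battery" was mislabeled by two of the three learners who completed the activity, which points to a specific part of the illustration to revisit.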

Once you’ve aligned assessments with learning objectives, the next task is to structure the learning activities and assessments so that they capture what learners actually do. For example, if the activity that asks learners to label the core components provides a hint button, you need to capture data on which learners click that button.
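One way to capture a hint click, assuming the course reports through the Experience API (xAPI) discussed under communication protocols, is to send a statement to a Learning Record Store (LRS). The sketch below builds such a statement with the ADL "interacted" verb and prepares, but does not send, the HTTP request. The activity IRIs, the attempt-number extension key, the LRS endpoint, and the credentials are hypothetical.

```python
import base64
import json
import urllib.request
import uuid
from datetime import datetime, timezone

# Hypothetical IRIs; use activity IDs your organization controls.
ACTIVITY_IRI = "https://example.com/activities/product-course/label-components"
HINT_IRI = ACTIVITY_IRI + "/hint"
# Hypothetical extension key recording which attempt the click belongs to.
ATTEMPT_EXT = "https://example.com/xapi/extensions/attempt"


def hint_click_statement(email, name, attempt):
    """Build an xAPI statement saying that a learner clicked the hint button."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": "http://adlnet.gov/expapi/verbs/interacted",
                 "display": {"en-US": "interacted"}},
        "object": {"objectType": "Activity", "id": HINT_IRI,
                   "definition": {"name": {"en-US": "Hint: label the core components"}}},
        "context": {
            "contextActivities": {"parent": [{"objectType": "Activity", "id": ACTIVITY_IRI}]},
            "extensions": {ATTEMPT_EXT: attempt},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def lrs_request(endpoint, statement, key, secret):
    """Prepare (without sending) a POST of one statement to an LRS statements resource."""
    token = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        endpoint.rstrip("/") + "/statements",
        data=json.dumps(statement).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "X-Experience-API-Version": "1.0.3",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
```

Sending the prepared request is then a call to `urllib.request.urlopen(req)`.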

3 Factors To Ensure You Go Deep, The Right Way

Here are the factors to keep in mind when you’re focusing on learning analytics for deeper tracking of learning objectives.

i. What Are The Design Considerations For The Online Activity / Assessment?

To enable learning analytics for the learning activity that’s aligned with the learning objective of the course, keep in mind the following design considerations:

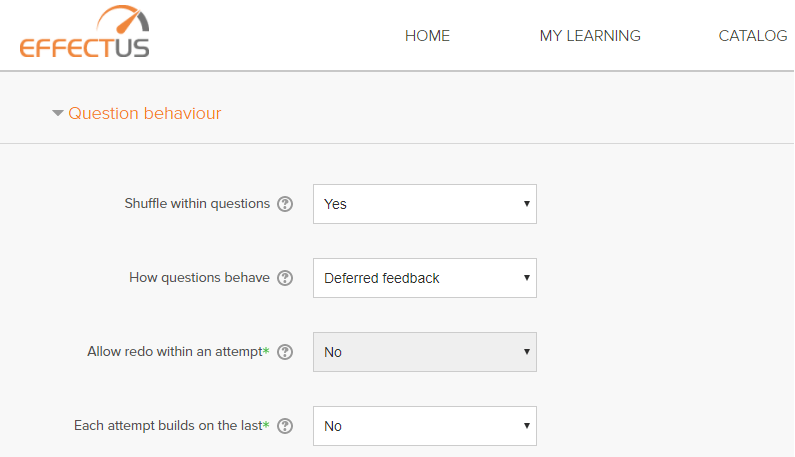

Quiz Engine Capabilities

A good Learning Management System (LMS) has a built-in quiz engine that lets you shuffle assessment questions, randomly select questions from a question pool, restrict the number of attempts on activities, and so on. You can also add timers that require learners to complete an activity or assessment within a set time. Many eLearning authoring tools also offer robust quiz capabilities.

LMS Options To Shuffle Questions
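The quiz-engine options described above can be modeled in a few lines. This is a minimal Python sketch, not any particular LMS’s implementation: it draws a random subset from a question pool, shuffles each question’s answer options, caps the number of attempts, and records a deadline for the timer. The `QuizPolicy` and `QuizSession` names and their defaults are hypothetical.

```python
import random
import time
from dataclasses import dataclass, field


@dataclass
class QuizPolicy:
    """Hypothetical quiz settings mirroring common quiz-engine options."""
    questions_per_attempt: int = 5
    max_attempts: int = 3
    time_limit_s: float = 600.0


@dataclass
class QuizSession:
    pool: list                      # question dicts, each with an "options" list
    policy: QuizPolicy
    rng: random.Random = field(default_factory=random.Random)
    attempts_used: int = 0

    def start_attempt(self, now=None):
        """Start a new attempt, or raise PermissionError if none remain."""
        if self.attempts_used >= self.policy.max_attempts:
            raise PermissionError("No attempts remaining")
        self.attempts_used += 1
        # rng.sample draws without replacement and in random order, so it both
        # selects questions from the pool and shuffles their order.
        drawn = self.rng.sample(self.pool, self.policy.questions_per_attempt)
        # Shuffle answer options on copies so the pool itself is untouched.
        questions = [{**q, "options": self.rng.sample(q["options"], len(q["options"]))}
                     for q in drawn]
        started = time.monotonic() if now is None else now
        return {"attempt": self.attempts_used, "questions": questions,
                "deadline": started + self.policy.time_limit_s}

    @staticmethod
    def is_late(attempt, submitted_at):
        """True if the submission came after the attempt's deadline."""
        return submitted_at > attempt["deadline"]
```

Passing a seeded generator, such as `random.Random(42)`, makes the draws reproducible when testing a quiz configuration.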

Number Of Attempts

You can check the LMS report for the number of attempts each learner made. You can have the report customized to include the learners who attempted the quiz or activity, the number of attempts, the completion time for the activity, and the overall score. You can also get a detailed report on each attempt at each question (item analysis).
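Both the attempts report and item analysis reduce to simple aggregations over attempt-level data. This minimal sketch assumes each row is one attempt with the hypothetical fields `learner`, `time_s`, `score`, and `responses` (question ID mapped to whether the answer was correct). Item difficulty here is the proportion of correct responses. The discrimination index compares the top-scoring half of attempts with the bottom half, a simplification of the common practice of comparing the top and bottom 27%.

```python
from collections import defaultdict


def attempt_report(rows):
    """Per-learner summary: attempts made, total time spent, best score."""
    report = defaultdict(lambda: {"attempts": 0, "total_time_s": 0, "best_score": 0})
    for r in rows:
        entry = report[r["learner"]]
        entry["attempts"] += 1
        entry["total_time_s"] += r["time_s"]
        entry["best_score"] = max(entry["best_score"], r["score"])
    return dict(report)


def item_analysis(rows):
    """Classical item statistics per question, using each attempt as one observation.

    difficulty: share of responses that were correct (higher means easier).
    discrimination: share correct in the top-scoring half of attempts minus
    share correct in the bottom half (a simplified upper/lower-group index).
    """
    ranked = sorted(rows, key=lambda r: r["score"], reverse=True)
    half = len(ranked) // 2
    upper, lower = ranked[:half], ranked[len(ranked) - half:]

    def p_correct(group, q):
        answers = [r["responses"][q] for r in group if q in r["responses"]]
        return sum(answers) / len(answers) if answers else 0.0

    questions = sorted({q for r in rows for q in r["responses"]})
    return {q: {"difficulty": p_correct(rows, q),
                "discrimination": p_correct(upper, q) - p_correct(lower, q)}
            for q in questions}
```

A question with low difficulty and near-zero or negative discrimination is usually the first one worth reviewing, since strong and weak performers answer it about equally well.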

ii. What Are The Communication Protocols?

While SCORM 1.2 is the most common specification supported by LMSs, SCORM 2004 made considerable improvements in sequencing and navigation. With SCORM 2004, eLearning developers can specify rules for how learners navigate between SCOs (shareable content objects), so you can set up specific learning paths for each learner. For example, you can prevent learners from attempting the assessment until they have completed all the topics in the course, or, if a learner fails an activity, require them to review related content before reattempting it.

The more recent Experience API (xAPI) allows tracking of learning experiences outside the LMS. With xAPI, you no longer have to package content as SCOs; you can track any learning experience, whether offline, informal, or on the job.

SCORM 1.2 suffices if you only need simple reports. SCORM 2004 or xAPI is the better choice when you need to enable learning analytics for in-depth analysis.
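In SCORM 2004, sequencing rules like these are declared in the course package’s manifest using IMS Simple Sequencing elements, and the LMS enforces them. The Python below is not SCORM syntax; it is a hypothetical model of the two example rules, meant only to make their behavior concrete: the assessment unlocks once every topic is complete, and failing an activity locks it until its related topics have been reviewed.

```python
from dataclasses import dataclass, field


@dataclass
class SequencingModel:
    """Hypothetical model of two sequencing rules; not SCORM manifest syntax."""
    topics: list                   # topics that must be completed before the assessment
    review_for: dict               # activity -> topics to review after failing it
    completed: set = field(default_factory=set)
    pending_review: dict = field(default_factory=dict)  # activity -> topics still to review

    def complete_topic(self, topic):
        """Mark a topic complete; revisiting it also counts as a review."""
        self.completed.add(topic)
        for remaining in self.pending_review.values():
            remaining.discard(topic)

    def assessment_unlocked(self):
        # Rule 1: the assessment stays locked until every topic is complete.
        return set(self.topics) <= self.completed

    def record_result(self, activity, passed):
        # Rule 2: a failed activity requires reviewing its related topics first.
        if not passed:
            self.pending_review[activity] = set(self.review_for.get(activity, []))

    def can_attempt(self, activity):
        # Allowed when no review is pending (or the pending set has been emptied).
        return not self.pending_review.get(activity)
```

In a real SCORM 2004 package, the LMS evaluates these conditions from the tracking data that each SCO reports, rather than from application code like this.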

iii. What Are The LMS Considerations?

An LMS is at the heart of learning analytics implementation. Your LMS should be able to support the following functions:

- Generate reports in real-time.

- Provide insights to improve learning by capturing more detailed learning analytics.

- Send automated reminders for course completion.

- Allow customization of reports.
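Automated completion reminders come down to selecting the right learners on a schedule. This minimal sketch assumes hypothetical enrollment records with a due date and a completion flag. It flags learners who have not completed a course and whose due date falls within a lead window or has already passed. How the reminder is delivered (email, LMS notification) is left out.

```python
from datetime import date


def reminders_due(enrollments, today, lead_days=3):
    """Return (learner, course, status) for incomplete enrollments due soon or overdue."""
    due = []
    for e in enrollments:
        if e["completed"]:
            continue  # no reminder for finished courses
        days_left = (e["due"] - today).days
        if days_left <= lead_days:
            status = "overdue" if days_left < 0 else f"due in {days_left} day(s)"
            due.append((e["learner"], e["course"], status))
    return due


if __name__ == "__main__":
    sample = [{"learner": "a", "course": "Product Basics",
               "due": date(2024, 5, 12), "completed": False}]
    print(reminders_due(sample, today=date(2024, 5, 10)))
```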

A Parting Note

Learning analytics can track learning objectives at a deeper level, provided your organization is willing to invest the time and effort that learning analytics design and implementation require. Download the eBook Leveraging Learning Analytics To Maximize Training Effectiveness - Practical Insights And Ideas for an in-depth view of the topic. For more insights, join the webinar and discover how to quickly plan for learning analytics to ensure utilization.

References: