Understanding How Digital Video Works

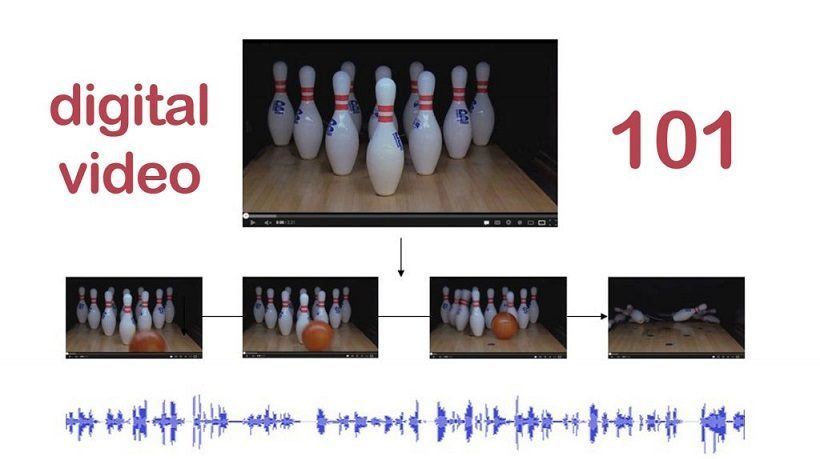

Digital video shows up on our screens like magic, but it’s conceptually the same as the simpler-to-understand motion pictures invented over a century ago. Just like physical film and analog video, a digital video stream is made up of individual frames, each one representing a time slice of the scene. Films display 24 frames per second, and American video presents 30 frames in that same time span; this number is known as the frame rate. The higher the number of frames in any given second, the smoother the video will appear. Digital video clips use frame rates from 12 to 30 frames per second, with 24 frames per second commonly used. The audio is stored as a separate stream, but kept in close synchronization with the video elements.

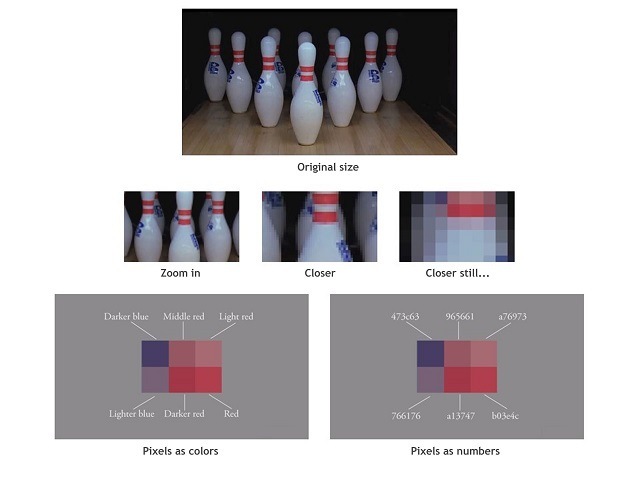

Like analog television, digital video uses a “divide and conquer” strategy. But in addition to dividing the image into a series of horizontal lines, it further divides each of those lines into a series of dots, called pixels, and represents each dot’s intensity and color with a number. If we looked at a frame of digital video and zoomed into it, each of these discrete pixels would become easy to identify. We can visually identify each pixel according to its overall intensity and color, but that color can just as easily be represented by a number that uniquely identifies its value and is easier for the computer to manipulate and store.
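As a rough illustration of how a pixel’s color can be reduced to a single number, the sketch below packs red, green, and blue values into one integer and unpacks them again. The 24-bit layout (one byte per channel, red in the highest byte) and the function names `pack_rgb` and `unpack_rgb` are assumptions chosen for the example; real video formats use a variety of layouts.

```python
def pack_rgb(red, green, blue):
    """Combine three 0-255 channel values into one 24-bit integer.

    Assumed layout for this sketch: red in the high byte, blue in the low byte.
    """
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be between 0 and 255")
    return (red << 16) | (green << 8) | blue


def unpack_rgb(pixel):
    """Split a 24-bit integer back into its (red, green, blue) channels."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF


orange = pack_rgb(255, 165, 0)
print(orange)              # 16753920
print(hex(orange))         # 0xffa500
print(unpack_rgb(orange))  # (255, 165, 0)
```

The single integer is what the computer stores and manipulates; the three channel values can always be recovered from it.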

We can thank the phone company’s work on its Picturephone™ video phone (think of the scene on the transport to the moon in Stanley Kubrick’s film, 2001: A Space Odyssey) as the catalyst for developing bitmap graphics. Picturephones debuted at the New York World’s Fair in 1964 and promised to add video to everyday phone calls, but the idea never caught on. The first versions were essentially low-resolution traditional television systems, but AT&T’s research arm, Bell Labs, which had invented the transistor some years earlier and was at the forefront of developing graphical computers, wanted to make the imaging process more digital.

The amount of memory dedicated to the video display is what controls the perceived quality of the video. Representing the image in fewer dots, and therefore less memory, creates a grainier and more pixelated look. This is akin to looking at a pointillist painting, which is made up of many finely placed brush strokes when viewed close up, but looks smooth when viewed from a distance. Early digital videos were small, typically 320 pixels across by 240 vertically. As memory became cheaper and computers faster, larger images with thousands of pixels across were easily displayed, affording the truly lifelike image quality we see on modern high-definition displays.

Unfortunately, the more pixels in an image, the more space it will take to store, and those numbers add up quickly. The low-resolution video at the top of the drawing above contains 240 lines of 320 pixels, or 76,800 pixels in all. Each pixel is made up of red, green, and blue values, each requiring 1 byte, for 3 bytes per pixel and a total of over 200 kilobytes per frame [1]. But there isn’t just one frame in a video clip; there are 24 of them per second. Each second needs more than 5 megabytes to store, and a minute requires 332 megabytes. The scale of these numbers becomes staggering at HD resolutions, where a single minute of video takes a whopping 8 gigabytes to store [2].
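The arithmetic behind these figures can be reproduced with a few lines of code. This is only a sketch of the uncompressed calculation, using the frame sizes and the 24 frames per second given in the text and notes; the function names are hypothetical.

```python
BYTES_PER_PIXEL = 3  # one byte each for red, green, and blue


def bytes_per_frame(width, height):
    """Uncompressed size of one frame in bytes."""
    return width * height * BYTES_PER_PIXEL


def bytes_per_minute(width, height, frames_per_second=24):
    """Uncompressed size of one minute of video in bytes."""
    return bytes_per_frame(width, height) * frames_per_second * 60


low_res_frame = bytes_per_frame(320, 240)
low_res_second = low_res_frame * 24
low_res_minute = bytes_per_minute(320, 240)
hd_minute = bytes_per_minute(1920, 1024)  # frame size used in note [2]

print(f"{low_res_frame:,}")   # 230,400
print(f"{low_res_second:,}")  # 5,529,600
print(f"{low_res_minute:,}")  # 331,776,000
print(f"{hd_minute:,}")       # 8,493,465,600
```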

These huge numbers presented a practical roadblock to using digital video, and a number of mathematical techniques were tried to reduce the large amount of storage needed. In the end, the most efficient method came from looking at small chunks of the image and seeing if they were similar to other image chunks. As it turns out, there is a lot of similarity between frames in a video scene. The bulk of the change in any video stream is found in the foreground action, while the background typically remains the same. A 16 by 16-pixel block would require 768 bytes to store, but if it were referenced by a single number instead of spelling out each pixel in the block, the size could be reduced dramatically. The MPEG video standard does this (among a number of other tricks) to reduce HD video from 8 gigabytes per minute to a still large, but more manageable, 100-150 megabytes per minute. The audio portion of the clip is compressed using a variant of the MP3 compression used on popular online music sites.
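To make the block idea concrete, here is a deliberately simplified sketch. It is not the MPEG algorithm, which is far more elaborate; it only shows how replacing an unchanged 16 by 16 block with a short reference shrinks a frame. The frame representation (lists of rows of RGB tuples), the one-byte marker per block, and all function names are assumptions made for the example, and the frame dimensions are assumed to be multiples of 16.

```python
BLOCK = 16           # block edge length in pixels
BYTES_PER_PIXEL = 3  # one byte each for red, green, and blue


def get_block(frame, top, left, size=BLOCK):
    """Return the size x size block whose top-left corner is (top, left)."""
    return tuple(tuple(row[left:left + size]) for row in frame[top:top + size])


def encode_frame(previous, current, size=BLOCK):
    """Encode a frame as ("same",) or ("raw", block) entries, one per block.

    A block is marked "same" when it is identical to the block at the same
    position in the previous frame; otherwise its pixels are stored in full.
    """
    encoded = []
    for top in range(0, len(current), size):
        for left in range(0, len(current[0]), size):
            block = get_block(current, top, left, size)
            if previous is not None and block == get_block(previous, top, left, size):
                encoded.append(("same",))
            else:
                encoded.append(("raw", block))
    return encoded


def encoded_size(encoded, size=BLOCK):
    """Estimated bytes: 1 marker byte per block, plus pixels for raw blocks."""
    raw_bytes = size * size * BYTES_PER_PIXEL  # 768 bytes for a 16 x 16 block
    return sum(1 if entry[0] == "same" else 1 + raw_bytes for entry in encoded)


# A 64 x 48 gray frame, followed by a frame in which one pixel changes.
first = [[(128, 128, 128)] * 64 for _ in range(48)]
second = [row[:] for row in first]
second[20][40] = (255, 0, 0)

print(encoded_size(encode_frame(None, first)))    # 9228
print(encoded_size(encode_frame(first, second)))  # 780
```

The first frame has nothing to refer back to, so all 12 blocks are stored in full. In the second frame only the block containing the changed pixel is stored; the other 11 cost a single marker byte each.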

Compressing individual frames is only part of the solution to practically delivering digital media via the computer. An overall framework is required to marshal the flow of the media data from the storage device to the screen and speakers. This framework is a software application, often bundled with the computer’s operating system, such as Apple’s QuickTime or Microsoft’s Video for Windows. It defines a mechanism to wrap the individual streams into a single file and mediates their playback. Unfortunately, even if the underlying streams are compressed using an industry-standard format such as MPEG, the streams are often incompatible with one another, requiring special software to be installed in order to play them.

Excerpted from Sage on the Screen: Education, Media, and How We Learn by Bill Ferster. Johns Hopkins University Press, 2016.

Notes:

- 240 lines x 320 pixels / line x 3 bytes / pixel = 230,400 bytes / frame.

- 1024 lines x 1920 pixels / line x 3 bytes / pixel x 24 frames / second x 60 seconds / minute = 8,493,465,600 bytes / minute.