Think. Reflect. Plan. Iterate: Development With AI

The previous two articles in this series on developing code with Artificial Intelligence (AI) to enhance eLearning covered the initial architecture, setup, and code editing. This final piece is about best practices, an example, and lessons learned from six months of testing AI development tools.

Example Of Using AI Development Tools

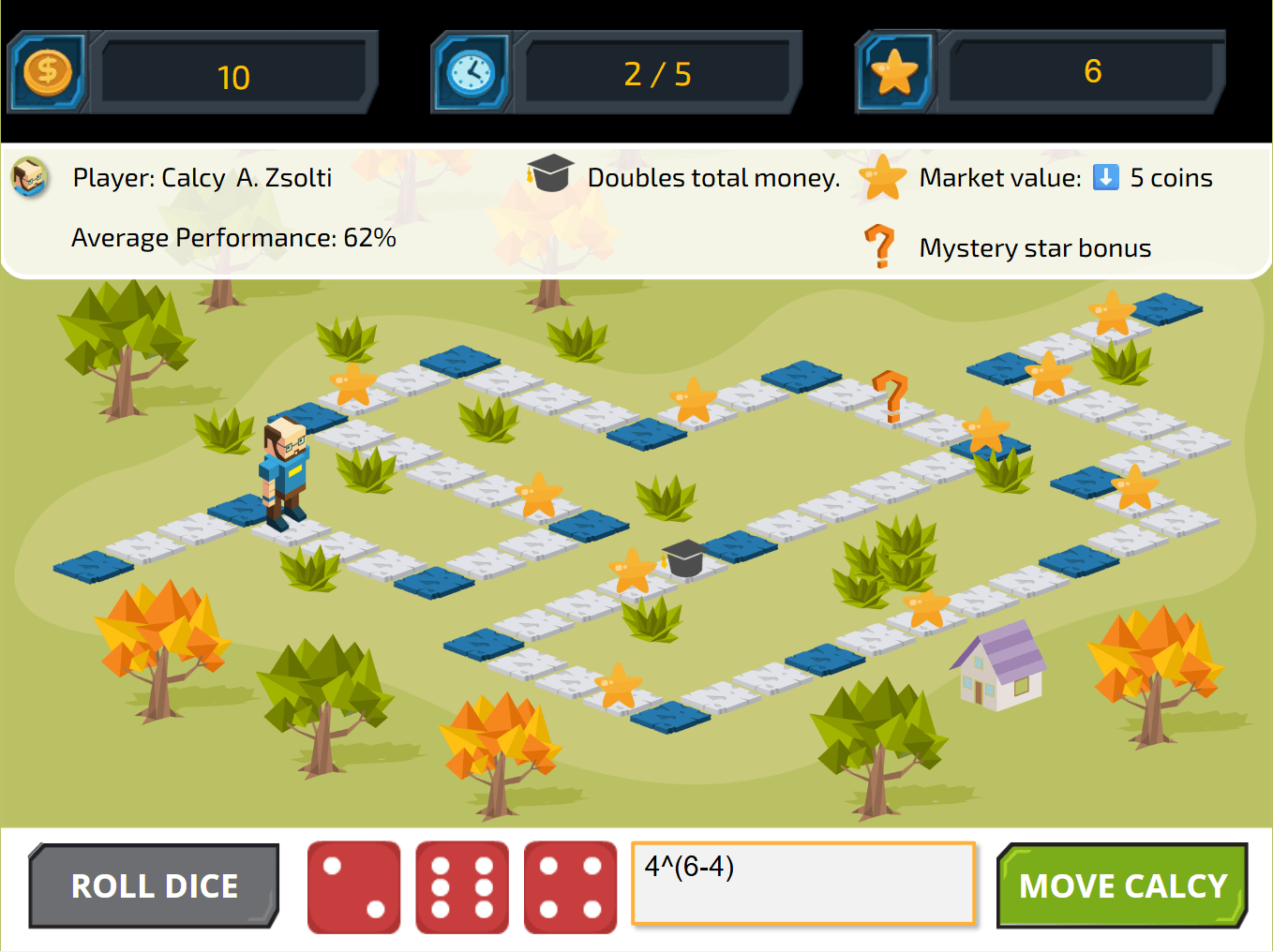

A couple of years ago, I built a prototype called Calcy. It was a gameful approach to learning how to use basic mathematical operations (+, -, *, ^) and the order of operations. Players get random dice numbers. With those numbers, they can build any valid expression, such as the one shown on the screen: 4^(6-4). The app calculates the result (4 ^ 2 = 16) and moves Calcy by that many steps.

The goal of the game is to collect points. The more numbers players use in the expression, the more points they earn. Landing on a star earns additional points.

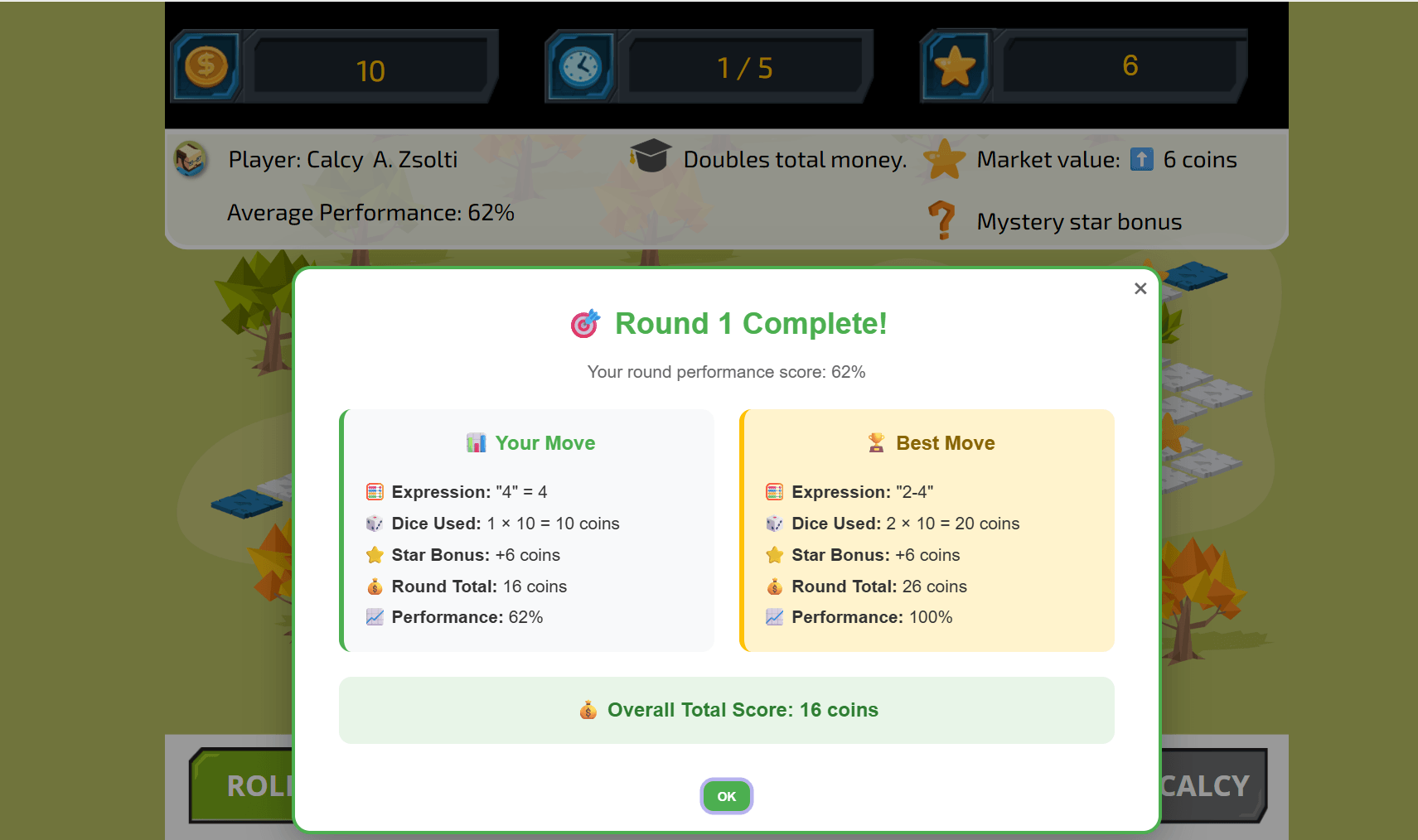

After each round, the app tells the player how they did compared to the best available move.

How I Made This

I built this using Storyline and JavaScript, with existing math libraries handling the calculation part. It also had a database so players could compare their results with those of existing players.

- The challenge

Back when I built this, I had to use hacks and workarounds, and my own skills without AI. Storyline did not have the ability to identify objects easily, so I had to build hacks around that, too. It took months to get it working properly.

And so, I put AI to the test this year: "Can AI help me rebuild this experience without me touching any of the code?" Instead of months, it took four days in development with AI.

Do Not Start With Code

If you simply take the legacy code and give it to AI to rebuild (I've tested it), it becomes a total mess. If you simply describe what you want (the entire game flow) and instruct the AI to build it for Storyline integration (which I have also tested), it becomes a total mess. Do not start with vague instructions or code. Start with thinking!

Start With A Plan

Start with ChatGPT (or any other tool you don't have credit limits for), and ask it to help build a PRD.md (product requirements document for specific features) or README.md (for general projects) file for your particular AI dev tool. (I'll use Windsurf as an example because I ended up building most of the project using the Windsurf IDE.) The .md file is a markdown file that AI tools can read and understand easily.

This initial .md file served as the starting point of the project. It included all the features I would need, all the guidelines I had to set, all the critical information AI must always consider (for example, with Storyline, AI needs to consider what files are automatically rewritten with every publishing, so it won't touch those), and how the communication should work with Storyline.

I worked with ChatGPT through many iterations of this file until I thought everything was included (more about a mistake later). For several projects, it also suggested a file structure, naming conventions, and even some of the architecture elements.

Creating The Project File For Editing

Once I had this .md file, I published the legacy Storyline file locally. Inside that published output, a webobject folder held all the legacy JavaScript code for Calcy. I included its exact location in the .md file and labelled the files as legacy, with instructions not to change, improve, or delete them. They were there just for educational purposes.

Then, I opened this published file folder in Windsurf as a project and added the .md file in the webobject folder for guidance. This way, AI could "see" the full project, even if writing code was supposed to happen inside a webobject folder.

Initial Prompt

After all that preparation, I used the chat in Windsurf to explain we're about to completely rebuild this experience. It was strictly instructed to read the full .md file and analyze the current file structure. Finally, I repeated explicitly to break the to-do list down into small chunks. In other words, make a plan before doing anything.

It took about 20 seconds for AI to read the whole .md and understand the file structure. After that, it started creating a plan. It listed several components we need to work on and suggested an order to tackle them. It also made a "mental memory" (I like this feature in Windsurf a lot) of this plan and the progress.

While the "game" seems straightforward, it is actually a complex flow as it includes:

- Randomizing dice and displaying them on the screen.

- Allowing the player to enter any mathematical expression, but checking to make sure it's using only the dice numbers and only the allowed operations (+, -, *, ^).

- Moving Calcy step-by-step to the location based on the result of the dice expression.

- Checking if Calcy landed on a star (extra points), graduation cap (double current points), or mystery (random reward).

- Showing Calcy's current move stats against the best available move (which has to be figured out first).

- Saving score in the database with a leaderboard at the end.
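To give a sense of why even the second item is not trivial, here is a minimal sketch of the dice check. It is not the game's actual code: the function names are hypothetical, and it assumes that each die can be used at most once and that digits cannot be combined into larger numbers. It only checks characters and dice usage, not whether the expression is well-formed.

```javascript
// Hypothetical helpers for illustration only; not the game's actual code.
const ALLOWED_SYMBOLS = new Set(["+", "-", "*", "^", "(", ")"]);

// Split an expression such as "4^(6-4)" into number and symbol tokens.
// Returns null as soon as a character outside the allowed set appears.
function tokenize(expression) {
  const compact = expression.replace(/\s+/g, "");
  const tokens = [];
  let i = 0;
  while (i < compact.length) {
    const ch = compact[i];
    if (/[0-9]/.test(ch)) {
      // Read the full run of digits so "46" is seen as 46, not 4 and 6.
      let j = i;
      while (j < compact.length && /[0-9]/.test(compact[j])) j++;
      tokens.push({ type: "number", value: Number(compact.slice(i, j)) });
      i = j;
    } else if (ALLOWED_SYMBOLS.has(ch)) {
      tokens.push({ type: "symbol", value: ch });
      i++;
    } else {
      return null;
    }
  }
  return tokens;
}

// True when every number in the expression matches a rolled die,
// with each die used at most once (an assumption about the rules).
function usesOnlyDice(expression, dice) {
  const tokens = tokenize(expression);
  if (tokens === null) return false;
  const remaining = [...dice];
  for (const token of tokens) {
    if (token.type !== "number") continue;
    const index = remaining.indexOf(token.value);
    if (index === -1) return false;
    remaining.splice(index, 1); // this die is now used up
  }
  return true;
}
```

With dice 4, 6, and 4, `usesOnlyDice("4^(6-4)", [4, 6, 4])` returns `true`, while the same expression with dice 4, 6, and 2 returns `false` because the second 4 was never rolled.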

There's a lot here, which I'll get into with more details in a separate article for developers, but for now, let's focus on a single element.

I told Windsurf to start with the most problematic one, moving Calcy. If we can't resolve that, the rest of the experience falls apart.

Single Feature Development

I instructed AI to keep updating the .md file as we make changes, so it is always the ground truth for the project. After I selected the feature to work on, Windsurf broke it down to a more detailed to-do list.

First, it suggested that we need to create a communication center that will serve as the single point for sending and receiving information from Storyline. This will simplify any later troubleshooting. It built that functionality out on its own.
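As a rough illustration of the idea (not the code Windsurf generated), a communication center can be a single object that owns every read and write of Storyline variables. The sketch assumes `player` is the object returned by Storyline's `GetPlayer()`, with its `GetVar` and `SetVar` methods; `createBridge` and `createFakePlayer` are hypothetical names.

```javascript
// Hypothetical "communication center": all traffic to and from Storyline
// goes through this one object, so there is a single place to log and debug.
// `player` is assumed to be what Storyline's GetPlayer() returns.
function createBridge(player) {
  const log = [];
  return {
    // Write a value to a Storyline variable and record the call.
    send(name, value) {
      log.push({ direction: "out", name, value });
      player.SetVar(name, value);
    },
    // Read a Storyline variable and record what came back.
    receive(name) {
      const value = player.GetVar(name);
      log.push({ direction: "in", name, value });
      return value;
    },
    // Return a copy of the message log for troubleshooting.
    history() {
      return log.slice();
    },
  };
}

// Stand-in player so the bridge can be tried outside Storyline.
function createFakePlayer(initial = {}) {
  const vars = { ...initial };
  return {
    GetVar: (name) => vars[name],
    SetVar: (name, value) => {
      vars[name] = value;
    },
  };
}
```

Inside a published course, the call would look like `createBridge(GetPlayer())`. Because nothing else touches the player directly, the log shows every exchange when something goes wrong.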

Then, I had to explain how to locate Calcy on the screen, so AI could write a function to move it. This is in the documentation of Storyline (new feature since the legacy version). I also told Windsurf that Storyline now uses GSAP (animation JavaScript library) for animation. Windsurf researched how GSAP works and learned how to use it with Storyline on its own.

- Iteration 1

Then, we started the iterative process of making sure we can move Calcy. AI built a testing function just for that. It worked out of the box: Calcy flashed on the screen and moved to a random point. Yay! Success!

- Iteration 2

Now that we could move Calcy, we needed to move it on the gameboard. I explained to Windsurf that I have a series of hotspots on the screen (invisible for users) representing steps. Windsurf then created a "brain" functionality for the gameboard, from initiating the steps to associating each with a hotspot, etc. Then, it created functions that could move Calcy from any of these steps forward one-by-one on the board. It looked like it worked! However, the more features you add, the more testing is required.

- Iteration 3

I tested the one-by-one step, and it worked all the way to the end of the gameboard. At that point, Calcy stopped. We needed to fix it so Calcy could do full circles, starting with step 1 again. It also turned out that AI forgot that Calcy can go backwards as well. When a negative number is submitted, the move should continue from step 1 back to the last step. Fixed! Or, as the Windsurf chat keeps putting it: "Perfect! I just implemented the step-by-step feature."

- Iteration 4

Now that Calcy was moving, we also had to check if it landed on a star. This took many rounds of testing with hardcoded stars on specific steps because we had to make sure a star only counted if Calcy landed on the step, not when it merely passed through. When that happened, the star also had to be removed and the points added.

These four iterations took up the largest share of the total development time. The visual part of development with AI is difficult because it combines abstract logic, scoring, and visual changes on screen.
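The logic behind iterations 3 and 4 can be sketched without any Storyline or animation code. This is a minimal illustration, not the project's code: `stepPath` and `resolveLanding` are hypothetical names, and the sketch assumes steps are numbered from 1 and the board is a closed loop.

```javascript
// Hypothetical sketch. Steps are numbered 1..totalSteps on a circular board.
// Returns every step Calcy visits, in order, for a positive or negative move.
function stepPath(start, move, totalSteps) {
  const direction = move < 0 ? -1 : 1;
  const path = [];
  let current = start;
  for (let i = 0; i < Math.abs(move); i++) {
    // Shift to 0-based, add totalSteps so the modulo never goes negative,
    // then shift back to 1-based numbering.
    current = ((current - 1 + direction + totalSteps) % totalSteps) + 1;
    path.push(current);
  }
  return path;
}

// Award points only for the step Calcy lands on, never for steps it passes.
// `stars` is a Set of step numbers; a collected star is removed from it.
function resolveLanding(path, stars, starPoints) {
  if (path.length === 0) return 0; // no movement, nothing to collect
  const landing = path[path.length - 1];
  if (!stars.has(landing)) return 0;
  stars.delete(landing);
  return starPoints;
}
```

On a 10-step board, `stepPath(9, 3, 10)` gives `[10, 1, 2]` and `stepPath(2, -3, 10)` gives `[1, 10, 9]`. If stars sit on steps 1 and 2, the forward move collects only the star on step 2, because step 1 was just passed through.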

The Mistake

And then I made a mistake. Or, actually, I realized that I had made a mistake earlier by not including in the .md that I would like these stars on the gameboard to be randomized for each game. The stars were hardcoded. This functionality required a complete revamp of logic. I wanted to move quickly to the next significant component, so I just accepted the AI development solution as is. It was a mess. I had to revert to a previous version and restart. It turns out that making stars randomly appear and function properly was not an iteration. It was a feature in itself.

When An Iteration Becomes A Feature

Windsurf suggested it could create these stars on the fly automatically and place them on the screen on top of the steps. I tested it and it worked fine, until I tested on mobile and realized that Storyline manages its own layers, so when you resize the screen, these stars no longer line up with the steps. AI kept trying to fix the problem its own way, like falling into a rabbit hole. I had to stop it.

Know when to stop! We ended up abandoning the approach and went back to the original one: creating the stars as images in Storyline, and then letting the code move them to the associated steps. AI said this was a great idea and that it had gotten overwhelmed :)
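Whichever way the stars end up on screen, choosing which steps get one is plain logic that can live apart from the display code. Here is a minimal sketch, assuming steps are numbered from 1; `pickStarSteps` is a hypothetical name, and the random source is passed in so the function can be tested.

```javascript
// Hypothetical sketch: choose `starCount` distinct steps out of 1..totalSteps.
// `random` defaults to Math.random but can be swapped for a test stub.
function pickStarSteps(totalSteps, starCount, random = Math.random) {
  const steps = Array.from({ length: totalSteps }, (_, i) => i + 1);
  // Fisher-Yates shuffle: walk backwards, swapping each item with a
  // randomly chosen item at or before its position.
  for (let i = steps.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [steps[i], steps[j]] = [steps[j], steps[i]];
  }
  // The first entries of a shuffled list are a random sample without repeats.
  return steps.slice(0, Math.min(starCount, totalSteps));
}
```

The returned step numbers can then be handed to whatever code moves the star images onto their steps.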

After completing this feature, I opened a new chat and instructed Windsurf to study the .md file again before moving on to the next component. For this first project, I did not use GitHub for source control, and so I "played the role" of managing code by saving iterations in a safe location. After this project, I always used GitHub for development with AI.

Other Examples For Inspiration

Just for inspiration, here are some of the other projects I used to test AI development capabilities:

- A Storyline variable manager

You upload your .story file, and the app extracts all variables in a nice table with default, min, max, usage on screen or in JavaScript triggers, and you can add definitions to them. Share that file with other developers.

- A team-based data literacy simulation

Where multiple teams make data literacy decisions, buy evidence to support their choice, and provide an explanation why they think a statement is false, true, or unknown.

- A gamification profile creator

That displays your gamification profile with motivational factors.

- An interactive tool to allow users to create their own Qualtrics survey

From a pool of approved questions.

- Python automation scripts

To connect Qualtrics, Smartsheet, and a SQL database for data analysis.

- xAPI code writer

What Did I Learn From These Projects?

These are best practices and guiding principles I've adapted from community best practices and my own lessons learned [1]:

Plan First, Then Code

Start in chat mode to agree on an approach. Build a readme.md (smaller projects), project.md (more complex projects), or a prd.md (product requirement document for a specific product) file that contains the necessary information about the project. Only then switch to code mode.

Set Rules, But Don't Get Hung Up On Details

Windsurf and others let you create a global rule file that includes basic principles. Keep it concise and consistent. Without global rules, you may end up using different types of variable notation (snake_case vs. camelCase), which can lead to problematic assumptions by the code.

Small Files

Each file should have a single purpose. Aim for no more than 400-500 lines.

Always @mention Relevant Files When Requesting Changes

In the chat, tell AI which file you want to be touched by using the @ sign. If you don't, AI may look at the open files or search for the full structure.

Iterate, Iterate, Iterate

Do not go vague and wild: "add real-time messaging." Do one small change and test. Be explicit with AI about this approach. I often ran into the issue where it wanted to change other parts of the code for "improvement," even if they had nothing to do with the current problem.

Start A New Chat For A New Feature Or Issue

Don't mix features and bug fixes in a long single chat. The longer your chat context window, the more likely AI is to start getting confused.

Do Not Blindly Accept All Changes

Most UIs indicate the number of additions and deletions AI has made. If nothing else, look at the deletions! I've found that sometimes, after working on a problem for a long time, AI can decide to completely revamp the code and try a new approach without confirming. This can delete part of the functionality you originally relied on for later development.

Use The Base Model For Execution

In my very first experiment, I used the most expensive model (Claude 4.0) for everything (100%), and used up the credit for the month within a week. When you're doing simple execution or troubleshooting, start with the base model. Use paid ones when needed. Windsurf has a free SWE-1 agent that is pretty capable of doing simple work.

Inline Edits Are "Free"

Use the chat for large-scale or complex problems. When you just need to change a couple of lines or adjust CSS, use inline editing. Windsurf's prediction model is a mind reader. It literally knows what I'm trying to type. And it's not just autocompletion! It infers, based on the code change I made in one place, what I need to change in another place. And it doesn't cost you any credits.

AI Will Make Mistakes

Revert to the old version. In my experience, the first 80% of what you want is smooth sailing. But when it comes to a bug or a mistake that AI accidentally made, be very careful! AI tends to go down a rabbit hole, fixing its own thing over and over, until neither you nor AI knows what's happening. If something is wrong, revert to the older version of the code that worked, and start with a new prompt.

Use GitHub Or Other Tools

Unless you only write quick, standalone snippets, I strongly recommend using a source code management system like GitHub. You can easily revert to older code, or try a feature in a new branch and merge it into the main code only if it works. If you decide not to use a source code management system, manage versions manually: create a backup folder where you regularly save a version.

The most important part is not what tool or technology you select for development with AI. It is to start building, iterating, and evolving. There's always a better, easier, and faster way to do something, but your last imperfect build is much better than the perfect one you never made.

References:

[1] Planning Mode Vs. "Let's create an .md plan first"?

Image Credits:

- The images within the body of the article are created/supplied by the author.