AI Is Already Reshaping L&D From The Bottom Up

AI in L&D has crossed from experiment to expectation. 87% of teams already use it, and only 2% have no adoption plans. If you wait, you'll fall behind.

In this article, I'll show the evidence of this tipping point, what it means for your team, and the moves to make now so you can scale with confidence.

About The Research

The insights in this article come from the AI in Learning & Development Report 2026, a global study conducted by Synthesia in partnership with Dr. Philippa Hardman. The research gathered 421 responses from L&D leaders, Instructional Designers, learning technologists, HR and talent teams, and Subject Matter Experts across North America, Europe, APAC, LATAM, and MEA.

Participants represented a wide range of industries—technology, education, consulting, manufacturing, healthcare, finance, government, and more—with a strong enterprise skew: nearly half work in organizations with 1000+ employees.

The Numbers Don't Lie: AI Adoption In L&D Is Now Mainstream, But Not From The Usual Sources

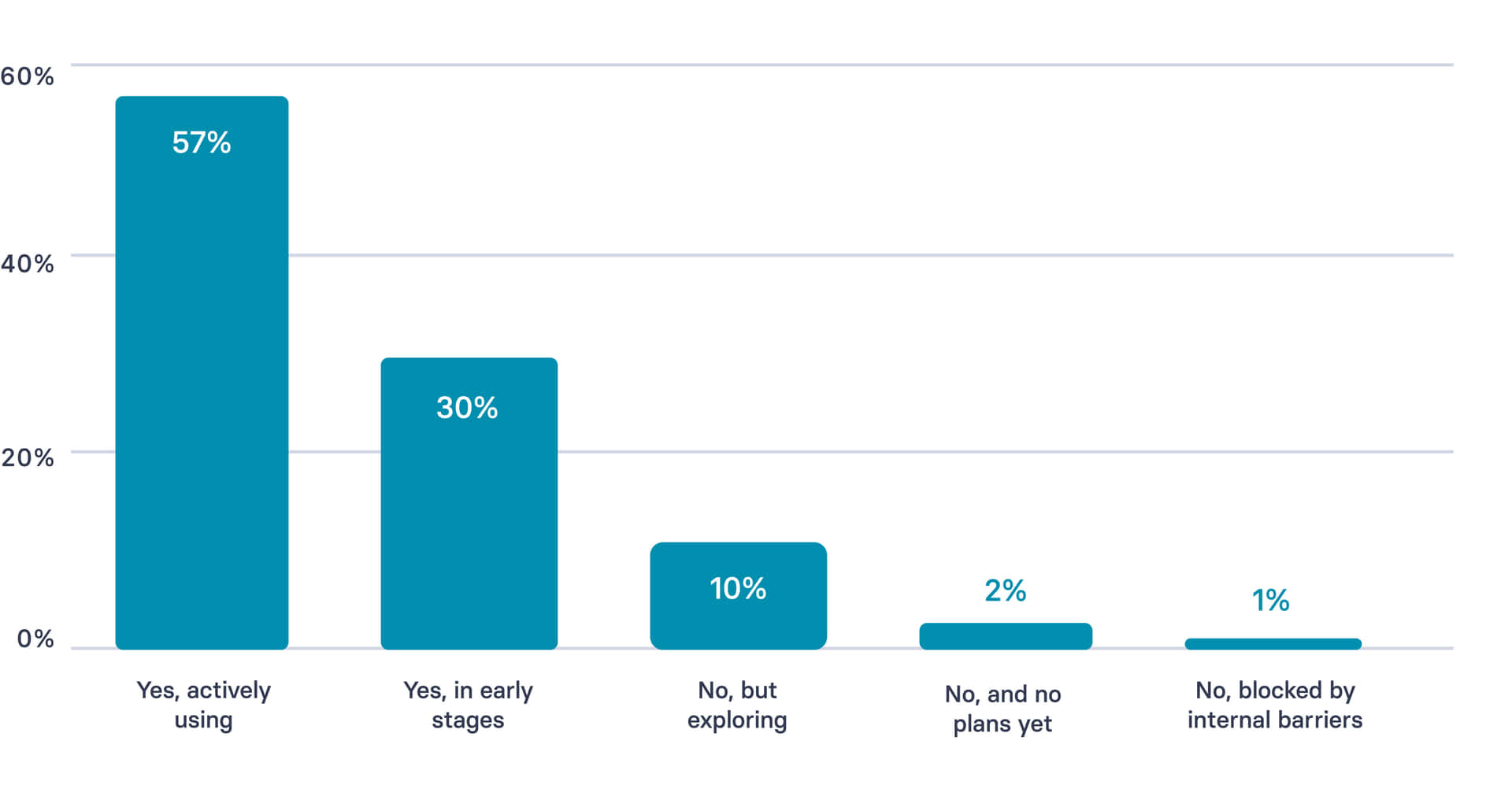

With 87% of L&D teams using AI in some form, we've moved from "should we?" to "how fast can we scale?" According to the research, 57% of teams use AI in production, and another 30% are running pilots. Last year, 20% of organizations weren't using AI at all; now only 2% have no adoption plans.

"Is your L&D team currently using AI tools in your Learning and Development programs?"

I've watched this shift happen firsthand. A year ago, teams were quietly testing ChatGPT for course outlines, and most adoption happened bottom-up, outside any formal organizational strategy. Now those same teams have shared AI playbooks and team workflows.

The drivers are clear: cost and time savings, faster production, and efficiency.

A learning team inside a global pharmaceutical manufacturer began with zero AI capability and relied entirely on manual processes for objectives, scenarios, and assessments. Within six months, they built a documented library of prompts and workflows that standardized how AI supported every stage of their design process. The breakthrough came when they stopped treating AI as a content shortcut and rebuilt their workflows around clarity, templates, and quality controls. The shift cut development cycles in half and reduced rework because teams finally had a shared method for generating consistent outputs. This is critical to get right: the transformation didn't come from experimenting with tools but from institutionalizing repeatable AI practices that scaled across the organization.

While executives debate governance, teams already use AI at scale for text-to-speech narration (63%) and quiz question generation (60%). Because adoption is happening bottom-up, AI strategy has to start from what teams are already doing. Adoption also shows up in admin work: teams lean on AI to draft reports, policies, and internal communications that used to eat entire afternoons.

What you should do now:

- Share these adoption statistics with executive sponsors to secure budget.

- Create a formal team AI playbook documenting current use cases and workflows.

- Set a 90-day scaling target to expand pilots into defined, team-level workflows.

From Faster Content To Smarter Learning: How AI Use Has Evolved

Early AI use cases in L&D centered on speeding up content generation: creating quizzes, drafting scripts, translating content. Time saved still matters to 88% of teams, but it's no longer the only story.

Teams are moving to adaptive pathways, skills mapping, and AI tutors—solutions aimed at improving learner experience, not just speed.

A large consumer-services company was struggling with uneven skill levels across its customer-facing teams but lacked the bandwidth to create targeted training at the pace operations required. The shift happened when they moved from text-based training documents to AI-generated training videos, guides, scenarios, and refreshers tied directly to the skill gaps supervisors were seeing in real time. Managers could refine these AI-generated videos in minutes, making continuous upskilling feasible without pulling people off the floor. Performance became more consistent across locations with no added headcount. The lesson: the real leverage came from accelerating content creation to meet emerging gaps, not from sophisticated automation.

The next frontier is already visible: teams are using AI to analyze needs, design adaptive sequences, implement tutors, and evaluate with predictive analytics. While 88% value time savings today, 72% expect personalized learning to become the primary benefit.

What you should do now:

- Select two or three use cases beyond content production: assessments or simulations, adaptive pathways, or AI tutors.

- Create or surface evaluation rubrics built around outcomes, such as reduced error rates or shorter time-to-competency.

- Define success metrics upfront so you can prove value beyond time saved.

The L&D Professional's New Reality: Strategic Architect, Not Content Creator

The intent of modern L&D has shifted: the role is no longer to push out courses, but to architect workforce capability, aligning skills, systems, and communication to business priorities.

In practice, most teams still spend the bulk of their time on production tasks: fixing slides, drafting scripts, rewriting SME content, managing revisions, and handling endless admin that absorbs entire weeks.

This is the gap that matters: while the intent is strategic, the day-to-day reality is executional, reactive, and dominated by low-leverage work. AI can now handle routine tasks such as drafting scripts, translating content, and building basic assessments, which frees L&D to focus on capability building and learning architecture.

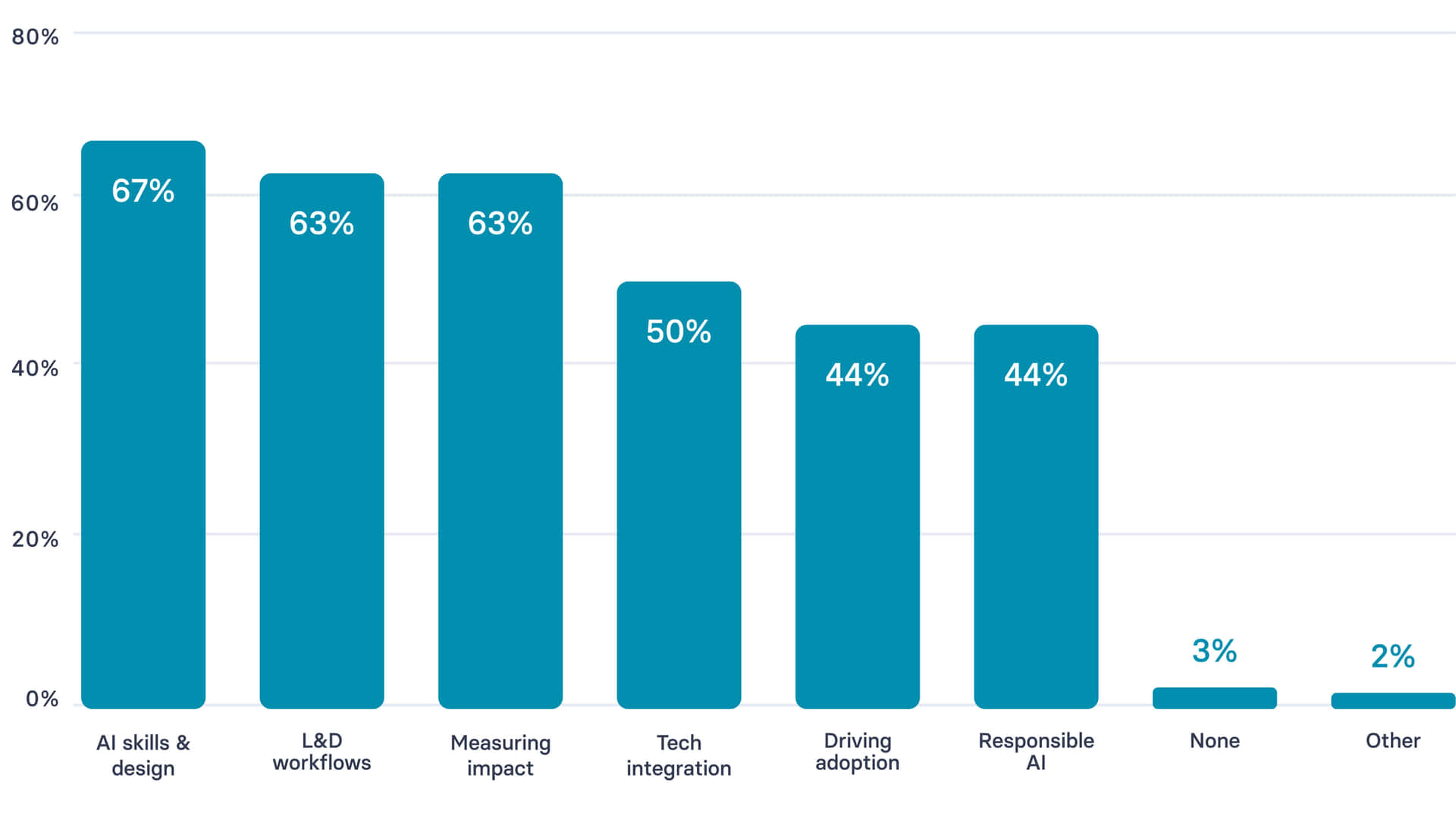

But the shift requires new skills: 67% of L&D professionals want AI skills training for their teams, and 63% need guidance on integrating AI into workflows.

"What types of training or support would help your team use AI more effectively in L&D?"

Effective L&D teams act more like performance consultants than course builders. They connect business strategy to workforce capability, spot skill gaps early, and measure impact in outcomes, not completions.

On the ground, AI also takes on the admin load: drafting stakeholder updates, SOPs, and policy refreshes so the team can stay focused on design and change management.

Human judgment remains essential. AI can generate content, but people ensure it fits culture, context, and behavior change goals.

What you should do now:

- Map team roles to new skill needs: AI literacy, data fluency, ethical implementation, and systems thinking.

- Deliver at least five hours of role-specific AI training for each team member.

- Establish an internal AI community of practice to share prompts, workflows, and quality standards.

The Training Multiplier Effect: Your Secret Weapon For AI Adoption

AI adoption doesn't rise because teams get access to a tool; it rises because teams receive small, targeted bursts of training tied directly to their work. The pattern is consistent: when teams learn the basics, share best practices and workflows, and immediately apply them to real projects, usage climbs.

In my experience, the training that works is lightweight, applied, and role-specific. Skip generic prompt tips; instead, show how specific prompts and workflows improve the work teams already do, inside the systems they already use.

What you should do now:

- Offer short, practical training sessions focused on real tasks.

- Build a small set of shared prompts and templates tied to core workflows.

- Track a simple before/after metric: "How long did this task take last month vs. this month with the new workflow?"

- Revisit and refine these workflows regularly.
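The before/after metric in the list above needs nothing more than arithmetic on logged task times. Here is a minimal Python sketch, assuming you record minutes per task for last month and for this month; the task names and durations are hypothetical placeholders, not figures from the report.

```python
from __future__ import annotations

# Minimal sketch of a before/after workflow metric.
# Task names and minutes are hypothetical placeholders, not report data.


def percent_time_saved(before_minutes: float, after_minutes: float) -> float:
    """Percentage reduction in task time; negative if the task got slower."""
    if before_minutes <= 0:
        raise ValueError("before_minutes must be positive")
    return (before_minutes - after_minutes) / before_minutes * 100


def summarize(tasks: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Map each task to its percentage time saved, plus a pooled total."""
    summary = {name: percent_time_saved(b, a) for name, (b, a) in tasks.items()}
    total_before = sum(b for b, _ in tasks.values())
    total_after = sum(a for _, a in tasks.values())
    summary["ALL TASKS"] = percent_time_saved(total_before, total_after)
    return summary


if __name__ == "__main__":
    # Hypothetical log: (minutes last month, minutes this month with the new workflow)
    log = {
        "draft quiz questions": (120, 45),
        "write narration script": (90, 60),
    }
    for task, saved in summarize(log).items():
        print(f"{task}: {saved:.1f}% less time")
```

Reporting the pooled total alongside per-task numbers keeps one dramatic improvement on a short task from overstating the overall gain.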

Measure What Matters: Shift From Speed To Outcomes (Or New Behaviors)

Teams are moving from time saved (valued by 88% today) to business impact (expected by 55%) and personalization (expected by 72%), yet 63% need help measuring impact.

Most teams can quantify hours saved; fewer can connect AI to outcomes. But if you start with the data you already have, you can begin to build the case.

A training team I worked with in the communications sector had reduced onboarding time, but at first we couldn't tie that reduction directly to business outcomes. Using a simple ROI model, we traced the shorter ramp time back to hard numbers:

- New hire salary

- Cost of developing training content

- Supervision cost per new hire

- Expected ramp time for new hire

Onboarding dropped from 26 weeks to 7 after implementing new AI-enabled content and workflows.

This is critical to get right: business leaders don't care that onboarding is "faster." They care that faster onboarding reduces the cost of paying a new hire during training, frees up supervisor capacity, and accelerates time-to-productivity. Linking these outcomes to specific AI workflows is the real value.
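The ROI reasoning above can be made explicit with a small model. This is a minimal sketch, not the model used in that engagement: it assumes a new hire's full salary plus a weekly supervision cost is spent for every week of ramp time, and it charges the one-time content development cost against the first year of savings. Only the 26-week and 7-week ramp times come from the example; the salary, supervision cost, content cost, and hiring volume are hypothetical placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass

WEEKS_PER_YEAR = 52


@dataclass
class RampInputs:
    annual_salary: float            # hypothetical new-hire salary
    weekly_supervision_cost: float  # hypothetical supervisor cost per new hire per week
    content_cost: float             # hypothetical one-time cost of developing training content
    hires_per_year: int             # hypothetical hiring volume


def ramp_cost_per_hire(inputs: RampInputs, ramp_weeks: float) -> float:
    """Salary plus supervision for every ramp week.

    Simplification: treats the hire as fully unproductive until ramp ends.
    """
    weekly_salary = inputs.annual_salary / WEEKS_PER_YEAR
    return ramp_weeks * (weekly_salary + inputs.weekly_supervision_cost)


def first_year_roi(inputs: RampInputs, baseline_weeks: float,
                   new_weeks: float) -> tuple[float, float]:
    """Return (net first-year savings, ROI as a multiple of content cost)."""
    if inputs.content_cost <= 0:
        raise ValueError("content_cost must be positive")
    saved_per_hire = (ramp_cost_per_hire(inputs, baseline_weeks)
                      - ramp_cost_per_hire(inputs, new_weeks))
    net = saved_per_hire * inputs.hires_per_year - inputs.content_cost
    return net, net / inputs.content_cost


if __name__ == "__main__":
    inputs = RampInputs(annual_salary=52_000, weekly_supervision_cost=500,
                        content_cost=50_000, hires_per_year=10)
    net, roi = first_year_roi(inputs, baseline_weeks=26, new_weeks=7)
    print(f"Net first-year savings: ${net:,.0f} (ROI {roi:.1f}x)")
```

With these placeholder inputs, each hire's ramp cost falls from $39,000 to $10,500, so ten hires save $285,000 before subtracting the $50,000 content cost. A more careful model would discount salary for the partial productivity new hires deliver during ramp; swap in your own baselines either way.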

Teams that succeed establish baselines, pick metrics business leaders care about, and report consistently.

What you should do now:

- Choose one program to instrument end-to-end.

- Choose a KPI that links AI inputs to business outcomes.

- Run a 90-day experiment and share results with stakeholders to start building your case.

The Agentic Future Is Already Here (And L&D Teams Are Building It)

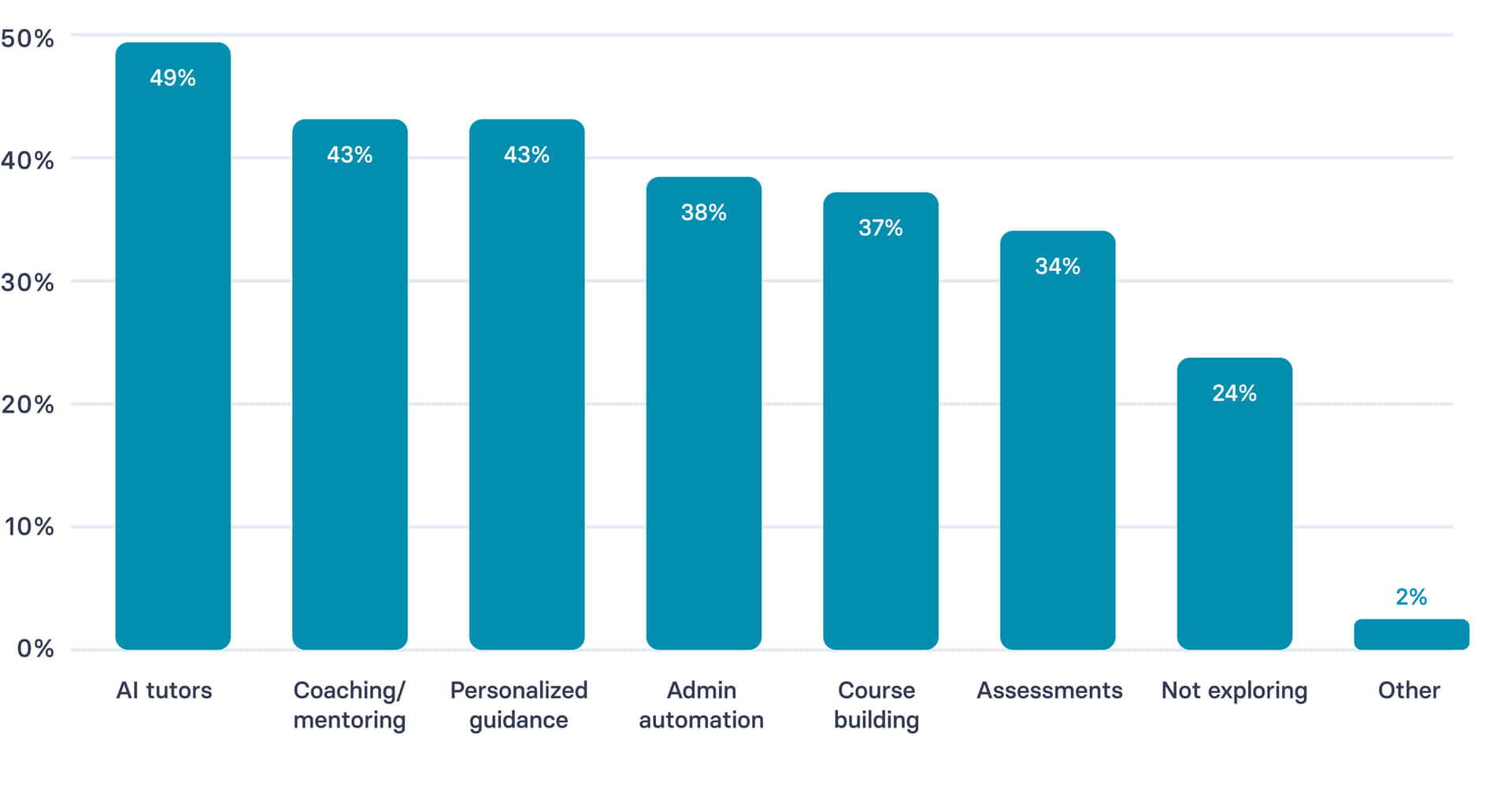

With 49% exploring AI tutors and 43% investigating AI-powered coaching, teams are already laying the groundwork for autonomous learning systems.

"Which of the following agentic capabilities are you exploring in your L&D work?"

What Is Agentic AI?

Agentic AI, in L&D terms, refers to AI systems that take goal-driven actions: they guide learners, make decisions, and adapt interventions without constant human prompting.

Think of an AI tutor that detects a learner's struggle, adjusts difficulty, recommends resources, and schedules a follow-up assessment.
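The tutor behavior described above is essentially a control loop: observe performance, detect struggle, adapt, and schedule a re-check. As a minimal, rule-based sketch (not an LLM-driven agent and not any vendor's product), assuming scores between 0 and 1, a difficulty scale of 1 to 5, a three-attempt window, and a two-day follow-up, all of which are hypothetical choices:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical tuning values; a real tutor would calibrate these.
WINDOW = 3              # recent attempts considered
STRUGGLE_BELOW = 0.6    # average score that signals struggle
MASTERY_AT = 0.9        # average score that signals readiness to step up
FOLLOW_UP_DAYS = 2      # delay before the follow-up assessment
MIN_LEVEL, MAX_LEVEL = 1, 5

# Hypothetical resource catalog keyed by difficulty level.
RESOURCES = {
    1: "worked example walkthrough",
    2: "short explainer video",
    3: "practice scenario",
    4: "case study",
    5: "open-ended challenge",
}


@dataclass
class LearnerState:
    level: int = 3
    scores: list[float] = field(default_factory=list)


@dataclass
class TutorAction:
    level: int
    resource: str | None = None
    follow_up: date | None = None


def next_action(state: LearnerState, score: float, today: date) -> TutorAction:
    """Record a score, then decide whether to step difficulty down or up."""
    state.scores.append(score)
    del state.scores[:-WINDOW]  # keep only the most recent attempts
    if len(state.scores) < WINDOW:
        return TutorAction(state.level)  # not enough evidence yet

    average = sum(state.scores) / WINDOW

    if average < STRUGGLE_BELOW:
        # Struggle detected: ease difficulty, recommend support, book a re-check.
        state.level = max(MIN_LEVEL, state.level - 1)
        state.scores.clear()  # judge the new level on fresh attempts
        return TutorAction(state.level, RESOURCES[state.level],
                           today + timedelta(days=FOLLOW_UP_DAYS))

    if average >= MASTERY_AT:
        # Consistently strong: raise difficulty and start a fresh window.
        state.level = min(MAX_LEVEL, state.level + 1)
        state.scores.clear()

    return TutorAction(state.level)


if __name__ == "__main__":
    state = LearnerState()
    for s in (0.5, 0.4, 0.5):
        action = next_action(state, s, date(2026, 3, 2))
    print(action)
```

In the usage run, the third low score drops the learner from level 3 to level 2, recommends the level-2 resource, and books a follow-up two days later. Clearing the window after each change means the tutor judges the new level only on fresh evidence; an agentic system would replace these fixed rules with model-driven decisions and add guardrails around them.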

I'm seeing this in the field. An eCommerce furniture retailer uses an AI coach to analyze its agents' performance and coach them on specific behaviors. A healthcare company built an AI FAQ agent that answers technical questions, suggests solutions, and routes complex queries to human experts.

Where should these agents live? 27% of L&D professionals don't know, and opinions are split across the LMS, productivity tools, standalone apps, and integration layers. Only 47% believe the LMS will remain the backbone.

Integration is also hard: 50% of teams need technical support to connect AI tools to existing systems, especially once an IT council starts raising questions.

What you should do now:

- Pilot one agentic use case in a low-risk area like onboarding or product knowledge.

- Build guardrails into agent instructions early, as a first or second step.

- Raise IT concerns early and often, starting before any pilot begins.

Final Thoughts

The tipping point isn't coming. It's here.

AI is already reshaping L&D from the bottom up, and the teams that move fastest aren't the ones with the biggest budgets or the most mature strategies. They're the ones who focus on three things: building small but meaningful skills, rewriting workflows where AI creates real leverage, and measuring outcomes leaders actually care about.

This is critical to get right: the value of AI in L&D will not be determined by how many tools you deploy, but by how effectively you reduce friction in the work, accelerate capability building, and demonstrate impact in terms of performance, quality, or speed-to-productivity. The shift is already underway: from content production to capability design, from activity metrics to business outcomes, from experimentation to scalable practice.

The teams that win now are the ones that:

- Invest in lightweight, role-relevant AI skills—not large training programs.

- Build clear, repeatable workflows where AI handles the low-leverage work.

- Anchor every use case to a business metric that matters.

- Pilot small, instrumented experiments and scale only what proves value.

Start with one high-impact workflow. Prove the outcome. Expand deliberately.

That's how L&D moves from experimenting with AI to reshaping how the organization learns, performs, and adapts.