Blending Reasoning With Fast Learning

Neuro-symbolic Artificial Intelligence (NSAI) denotes a research paradigm and technological framework that synthesizes the capabilities of contemporary Machine Learning, most notably Deep Learning, with the representational and inferential strengths of symbolic AI. By integrating data-driven statistical learning with explicit knowledge structures and logical reasoning, NSAI seeks to overcome the limitations inherent in either approach when used in isolation.

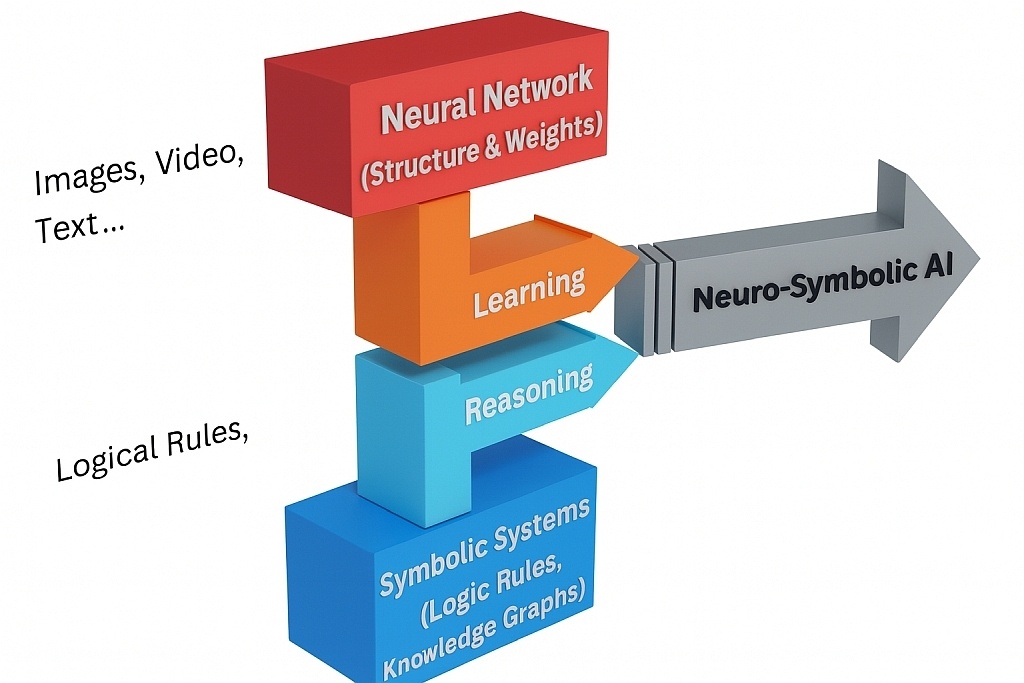

Symbolic: Logic, Ontologies. Neural Networks: Structure, Weights.

Within this paradigm, the term "symbolic" refers to computational methodologies grounded in the explicit encoding of knowledge through formal languages, logical predicates, ontologies, and rule-based systems. Such symbolic representations, ranging from mathematical expressions and logical assertions to programming constructs, enable machines to manipulate discrete symbols, enforce constraints, and derive conclusions via structured inference. Symbolic AI thus emphasizes the classification of entities and the articulation of their relationships within machine-readable knowledge frameworks that support transparent, logically grounded reasoning processes.

In purely sub-symbolic neural networks, information is captured implicitly through patterns of weighted connections that are gradually adjusted during training. These distributed representations allow the network to approximate desired outputs without relying on explicit, human-interpretable structures. Although such models excel at extracting correlations from unstructured data and offer remarkable scalability in dynamic, data-rich environments, their limitations have become increasingly evident. Sub-symbolic systems often struggle to generalize beyond their training distribution, particularly when confronted with novel or complex patterns. This can manifest in erroneous or fabricated outputs, commonly termed hallucinations, as well as uncontrolled biases and a persistent lack of transparent justification for the conclusions they generate.

NSAI is founded on integrating the structured reasoning capabilities of symbolic systems (explicit relationships, constraints, and formal logic) with the pattern-learning strengths of neural networks, as illustrated in Figure 1. This hybrid approach leverages both paradigms: neural models extract features from unstructured data (fast learning), while symbolic representations provide context, structure, and interpretability (reasoning).

Figure 1. NSAI: a symbiosis between Neural Networks and Symbolic Systems

An Application Domain And Taxonomy

In medical diagnostics, for example, a deep-learning classifier may detect visual patterns in an imaging scan and assign a probabilistic label for a particular disease, yet offer no rationale for its conclusion. By incorporating domain knowledge, such as ontologies of medical conditions, causal relationships between symptoms, and structured clinical guidelines, a neuro-symbolic system can contextualize the image features within a broader medical framework. Such enriched representation supports more accurate diagnostic reasoning, enables cross-referencing with patient histories and statistical health data, and ultimately yields predictions that are both more reliable and more explainable to clinicians.
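As a minimal sketch of the diagnostic scenario above, the following Python snippet pairs a stand-in for a neural classifier with a tiny hand-written rule base. Everything in it is a hypothetical assumption made for illustration: the disease labels, the probabilities, the `RULES` table, the additive `step` adjustment, and the function names. It is neither clinical knowledge nor the API of any real system.

```python
from dataclasses import dataclass


def neural_scores(scan_id: str) -> dict:
    """Stand-in for a trained image classifier (hypothetical fixed output)."""
    return {"pneumonia": 0.62, "bronchitis": 0.38}


# Hypothetical symbolic knowledge: findings that support or contradict
# each diagnosis. Illustrative only, not medical guidance.
RULES = {
    "pneumonia": {
        "supports": {"fever", "productive_cough"},
        "contradicts": {"normal_oxygen"},
    },
    "bronchitis": {
        "supports": {"wheezing"},
        "contradicts": {"fever"},
    },
}


@dataclass
class Diagnosis:
    label: str
    score: float
    explanation: list


def diagnose(scan_id: str, findings: set, step: float = 0.1) -> list:
    """Combine classifier probabilities with rule checks on patient findings."""
    ranked = []
    for label, prob in neural_scores(scan_id).items():
        rule = RULES[label]
        supporting = sorted(rule["supports"] & findings)
        conflicting = sorted(rule["contradicts"] & findings)
        # Nudge the neural score up or down for each matching rule condition,
        # then clamp it to the [0, 1] range.
        score = prob + step * (len(supporting) - len(conflicting))
        score = min(max(score, 0.0), 1.0)
        # Record why the score moved, so the result can be explained.
        explanation = [f"classifier probability {prob:.2f}"]
        explanation += [f"supported by {f}" for f in supporting]
        explanation += [f"contradicted by {f}" for f in conflicting]
        ranked.append(Diagnosis(label, score, explanation))
    return sorted(ranked, key=lambda d: d.score, reverse=True)


if __name__ == "__main__":
    for d in diagnose("scan-001", {"fever", "productive_cough"}):
        print(d.label, round(d.score, 2), d.explanation)
```

The additive adjustment is deliberately crude. What the sketch is meant to show is the division of labor: the network proposes scored labels, and the symbolic layer checks them against explicit knowledge and records a human-readable justification for each result.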

Recent literature has introduced several taxonomies for neuro-symbolic AI. Here, we reference one specific taxonomy [1], which organizes NSAI systems into three main categories:

- Learning for reasoning

Neural networks and Deep Learning models are used to extract symbolic knowledge from unstructured data, such as text, images, or video. The extracted knowledge is then integrated into symbolic reasoning or decision-making processes.

- Reasoning for learning

Symbolic knowledge, such as logic rules, semantic structures, or domain ontologies, is incorporated into the training of neural models. The approach improves generalization, performance, and interpretability. In knowledge-transfer scenarios, symbolic information guides learning when adapting models across domains.

- Learning–reasoning (bidirectional integration)

Neural and symbolic components interact continually. Neural networks generate hypotheses or predictions about relationships and rules, while the symbolic system performs logical reasoning on this information. The symbolic results are then fed back to the neural network, refining and improving the overall system's performance.
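To make the "reasoning for learning" category more concrete, here is one possible way a logic rule could enter a training objective: as a penalty added to the usual data loss. This is a sketch under stated assumptions, not the method of the cited taxonomy. The rule `A -> B`, the product-based soft truth value, the `weight` parameter, and all function names are illustrative choices.

```python
import math


def cross_entropy(p_true: float) -> float:
    """Data loss: negative log-likelihood the model assigns to the correct label."""
    return -math.log(max(p_true, 1e-12))


def implication_violation(p_a: float, p_b: float) -> float:
    """Soft violation of the rule A -> B.

    The rule is broken exactly when A holds and B does not, so the degree of
    violation is taken here as p(A) * (1 - p(B)), an assumed product-style
    relaxation of "A and not B".
    """
    return p_a * (1.0 - p_b)


def rule_regularized_loss(p_true: float, p_a: float, p_b: float,
                          weight: float = 1.0) -> float:
    """Total loss = data loss + weight * rule-violation penalty."""
    return cross_entropy(p_true) + weight * implication_violation(p_a, p_b)


if __name__ == "__main__":
    # Hypothetical rule: "pneumonia -> lung_infection".
    consistent = rule_regularized_loss(p_true=0.8, p_a=0.9, p_b=0.95)
    inconsistent = rule_regularized_loss(p_true=0.8, p_a=0.9, p_b=0.1)
    print(f"consistent prediction:   {consistent:.3f}")
    print(f"inconsistent prediction: {inconsistent:.3f}")
```

Because the penalty grows when the model believes `A` but not `B`, minimizing the combined loss pushes predictions toward agreement with the rule while still fitting the labeled data.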

Past, Present, Future

Although the foundations of neuro-symbolic AI were laid decades ago, the field has gained remarkable momentum only in recent years, as demonstrated by a surge in scholarly work. Growing interest is driven by its potential in high-impact domains: in healthcare, NSAI can mine clinical literature and combine patient data with structured medical knowledge to support more informed reasoning; in robotics, it offers a pathway to more perceptive, adaptable, and autonomous systems by merging learned representations with explicit logic-based decision processes. Financial markets may also benefit from NSAI by improving credit risk prediction [2] through combining data-driven learning with structured financial knowledge.

Despite this progress, NSAI has yet to achieve substantial commercial adoption. Even in Natural Language Processing, an area with clear potential for symbolic integration, current systems remain largely neural and rarely incorporate explicit symbolic reasoning. A central challenge remains how to combine neural and symbolic components in ways that preserve the strengths of both. Achieving this requires new architectures and learning paradigms capable of unifying statistical pattern recognition with structured reasoning. Although significant advances exist, a broadly effective and scalable integration strategy has not yet been established.

Symbolic components also face efficiency limitations. Constructing logic rules and structured knowledge typically relies on labor-intensive, expert-driven processes. Neural networks are therefore often used to address tasks that are computationally prohibitive for purely symbolic systems. Automating rule extraction and developing more robust symbolic-representation learning methods represent important future research directions.

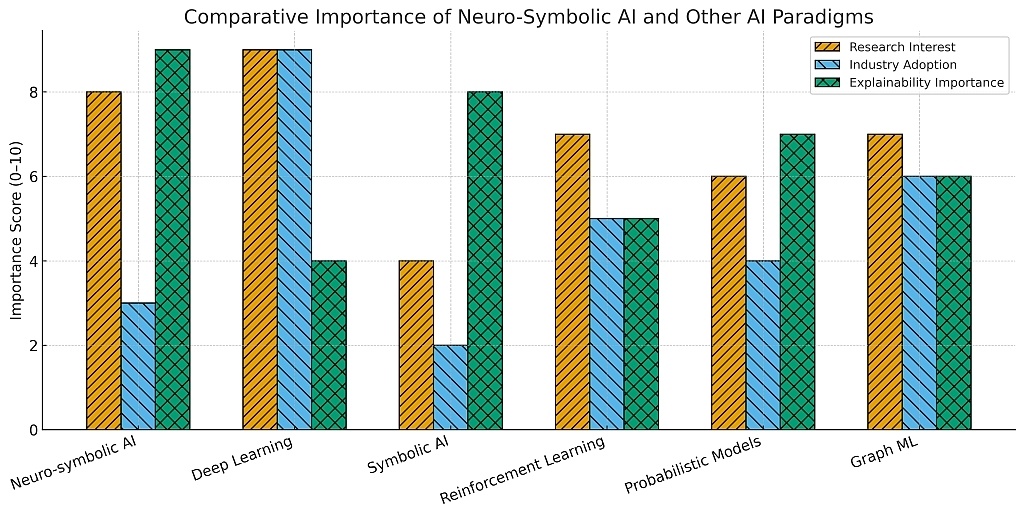

The future of NSAI is closely tied to developments in neural networks, whose capabilities and limitations both motivate and constrain NSAI approaches. Recent progress in Large Language Models (LLMs) is especially noteworthy, as these systems increasingly demonstrate proficiency in mathematical and logical tasks traditionally associated with symbolic AI. Figure 2 compares several major AI system categories, reflecting their current levels of industry adoption, research interest, and explainability (defined here as the extent to which a model's internal processes or outputs can be clearly understood).

Figure 2. Neuro-Symbolic AI vs. major AI system categories

Whether NSAI represents the next crucial paradigm in Artificial Intelligence remains an open debate. This discussion is intertwined with broader questions about how closely AI should mimic the human brain. Neural networks abstract biological structures, while symbolic systems reflect the explicit reasoning patterns humans articulate. Understanding how these two perspectives relate, and whether they can meaningfully complement one another, lies at the heart of both NSAI's promise and the ongoing inquiry into it.

References:

[1] D. Yu, B. Yang, D. Liu, H. Wang, and S. Pan. "A survey on neural-symbolic learning systems", Neural Networks, Vol. 166, 2023, pp. 105-126, ISSN 0893-6080, https://doi.org/10.1016/j.neunet.2023.06.028

[2] V. Dey, F. Hamza-Lup, and I. E. Iacob. "Leveraging Top-Model Selection in Ensemble Neural Networks for Improved Credit Risk Prediction", 17th Intl. Conf. on Electronics, Computers and Artificial Intelligence (ECAI), Targoviste, Romania, pp. 1-7, https://doi.org/10.1109/ECAI65401.2025.11095568

Image Credits:

- The images within the body of the article were created/supplied by the author.
