How Client Expectations Stop L&D From Modernising

One of the most common phrases I hear from L&D professionals is: “We want to modernise and use more digital but our clients expect, and just ask for, training.” So, how do you change their minds to be able to do something else – something more effective – instead? The short answer is: You may not be able to just do what you want (and quite rightly so), but you really should be more focused on the outcomes than on the activities.

When a client asks you to run a training course for them, they are playing their part in a long-established conversation. For years, L&D has been asked for help and the stock response has been: “We could run a course for that!” And not because it will guarantee improvement or enhancement but because the course has been our stock-in-trade for decades. For their part, our clients have been solutioneering on our behalf. Aren’t we all told in our formative working years: “Don’t come to me with problems, come to me with solutions…”

But in what other profession is the activity valued over the results? Certainly not in medicine. Not even in finance: Imagine clients approaching your finance director and asking to change the bottom line numbers on their department’s budget. The FD certainly wouldn’t comply without challenge. If they didn’t laugh the client out of their office, they might be calling for security.

For at least 15 years, L&D have wanted to become more than just order-takers. But if we’re going to continue to value activities over outcomes, or expect different outcomes with the same activities, then nothing will change.

Here are three things you can do to help influence your stakeholders and have them focus on doing the right things in pursuit of an outcome rather than simply the activities they are used to.

1. Run Small Experiments With Stakeholders Who Are ‘Ready’ To Try Something Else

Readiness need not mean just your ‘early adopters’, or those who would be up for trying ‘something new’, but those who really need your help. If you’ve got a stakeholder who wants email training, they don’t have a real problem for you to help solve. They have a small problem, at best, that they could sort out on their own. However, if you have a stakeholder whose team is missing critical KPIs and wants your help, you have something to work with. I’ve spoken before about real problems and perceived problems and how L&D too often gets involved in perceived problems, such as: “We don’t have a course for (x)”.

However, there are so many real problems waiting to be solved that you don’t need to go hunting for them for long. If you understand the goals and pain points of your key stakeholders, then these will be prominent in conversations. But here’s a clue anyway: These problems are core to what the organisation is trying to achieve.

When you have a stakeholder who really needs help in overcoming business performance problems, you often have licence to do what it takes to enhance performance.

The only way you’ll modernise in a way that is meaningful and makes sense to your organisation and your stakeholders is by running experiments. Work where there is a real business performance problem to address and an appetite to make things better.

What Are Experiments?

Well, let’s start with what experiments are not. Experiments are not pilots. We’re not talking about piloting a course to see what needs tweaking before we hit the masses. That’s just normal practice. No innovation, no modernisation. Just status quo.

Experiments begin with understanding the friction being experienced by your target group, and working with them on a ‘Minimum Valuable Product’ that will equip them to perform with more competence and confidence than they could before you got involved. Digital resources and conversations are the tools of an experiment, meaning they are repeatable experiences in pursuit of your target group’s goals. Once you’ve run a successful experiment, you can scale your solution to benefit more employees with the same, or comparable, problem.

Pilots are generally experienced once, after which everybody else gets a more refined one-and-done experience. Experiments, by contrast, are run to solve the performance problem, not the ‘learning problem’. To help make sense of this, let’s look at number two…

2. Focus On What Really Needs To Be Addressed

When L&D think in terms of programmes and content, there is always a solution that just needs tweaking before being launched upon an audience. Generic, silver-bullet solutions that are well-received but miss the mark. You know the ones: You’re still trying to prove the ROI on them several months (or years) after they were delivered.

Instead of making the link between ‘need’ and ‘training’, take some more time to understand the actual performance problem that is being experienced.

After years on the fringes of L&D, Performance Consulting is becoming more prominent as a way of both understanding what’s not working and also what needs to be done about it.

At its essence, performance consulting is a series of questions and a collaborative intent to solve real business performance problems with, and for, the client. Performance consulting gets to the heart of what is being experienced as a problem and brings both the practitioner (L&D) and the client a long way in recognising what needs to be done. Performance consulting helps you to understand four key things:

- What the presenting performance issue appears to be, by asking: What is actually being observed and experienced?

- Who is experiencing the performance issue, by asking: Who, specifically, needs to be doing something differently? This could be an individual, team or group of people.

- An understanding of how things are currently, by asking: What is happening now?

- A recognition, and articulation, of how things should be working, by asking: How do you want things to be?

An exploration of these questions is likely to highlight, to both you and your client, the gap between the reality of now and the ideal, as well as the factors that need addressing to resolve the issues. You’ll recognise which of these factors are Skill, Will, Structural, Environmental, ‘Political’ and Cultural, and this will help you lead the conversation towards experiments, i.e. what you can try in order to move the needle. Is there an area that is primed, and truly in need of some assistance? Do they recognise the problem? And are they all experiencing the same friction as they strive to achieve their goals?

You can then progress only by understanding the business performance problem from the perspective of the main actors, so work with them to refine the ‘goal’ (what they are trying to achieve in relation to the problem) and the friction they are experiencing in service of that goal. What is uncovered is what needs working on, and could be as simple as a process map and printed checklist. Or it could be a series of digital resources and regular conversations as you progress through defined milestones.

Whatever happens, your efforts will be addressing what is being experienced by those who are accountable for results, taking them on a journey from ‘not performing to expectations’ to ‘performing to expectations’, which is a compelling case for trying something based on outcomes over activity. But if your stakeholder wants cold, hard data…

3. Use Data To Make Informed Decisions

L&D departments today are exploring ways in which data can not only be used to ‘prove the effectiveness of a learning intervention’ but also to know whether they need to intervene at all. Stakeholders, and L&D, can be very quick to explore a ‘learning activity’ without truly understanding the extent, or context, of a particular problem.

Data can be used to identify whether there is a problem that is worthy of significant investment (of time, money, energy and attention), or whether it’s an irrelevant detail that is magnified by an aversion held by the stakeholder.

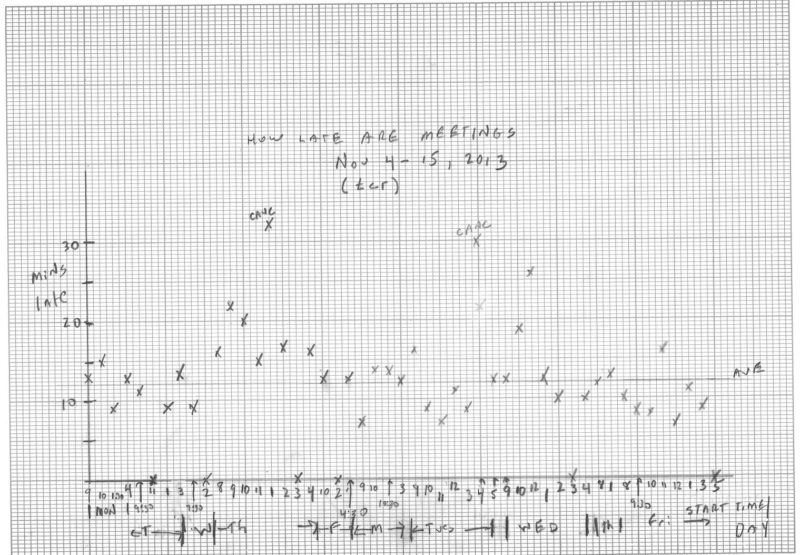

Imagine being told by a stakeholder that meetings at your organisation never start on time and that there should be some training to make people aware of meeting etiquette. On the surface, there will be anecdotal evidence that backs this up. We’ve all been to meetings that start late or that aren’t the most efficient use of company time. But does it really warrant the design and delivery of training – and would that really address the issues?

An article published in Harvard Business Review, titled ‘How to Start Thinking Like a Data Scientist’, is the best articulation I’ve read of the potential application of data in L&D. The following bullets summarise the recommended steps, much of it in the words of the author:

- Start With Your Problem Statement

In this case ‘meetings starting late’ and rewrite it as a question: “Meetings always seem to start late. Is that really true?”

- Brainstorm Ideas For Data That Could Help Answer Your Question And Develop A Plan For Gathering Them.

Write these down along with ways you could, potentially, collect the data. In this example, define when meetings ‘begin’. Is it the time someone says, “Ok, let’s begin.”? Or the time the real business of the meeting starts?

- Collect Your Data

And ensure you trust your data – although you’re almost certain to find gaps. For example, you may find that even though a meeting has started, it starts anew when a more senior person joins in. Modify your definition and protocol as you go along.

- As Soon As You Can, Start Drawing Some Pictures

“Good pictures make it easier for you to both understand the data and communicate main points to others.” The go-to picture of the author is a time-series plot, “where the horizontal axis has the date and time and the vertical axis has the variable of interest. Thus, a point on the graph below is the date and time of a meeting versus the number of minutes late.”

- Return To Your Initial Question And Develop Summary Statistics

Have you discovered an answer? For example: “Over a two-week period, 10% of the meetings I attended started on time. And on average, they started 12 minutes late.”

- Answer The “So What?” Question

In this case, “If those two weeks are typical, I waste an hour a day. And that costs the company $X/year.” Many analyses end because there is no “so what?” Certainly, if 80% of meetings start within a few minutes of their scheduled start times, the answer to the original question is: “No, meetings start pretty much on time,” and there is no need to go further.

- If The Case Demands More, Get A Feel For Variation

“Understanding variation leads to a better feel for the overall problem, deeper insights, and novel ideas for improvement. Note on the picture that 8-20 minutes late is typical. A few meetings start right on time, others nearly a full 30 minutes late.”

- Ask, “What Else Does The Data Reveal?”

From the example, five meetings began exactly on time, while every other meeting began at least seven minutes late. In this case, it was revealed that all five meetings were called by the Vice President of Finance. “Evidently, she starts all her meetings on time.”

- So Where Do You Go From Here? Are There Important Next Steps?

“This example illustrates a common dichotomy. On a personal level, results pass both the ‘interesting’ and ‘important’ test. Most of us would give anything to get back an hour a day. And you may not be able to make all meetings start on time, but if the VP can, you can certainly start the meetings you control promptly.”

- Are Your Results Typical?

Can others be as hard-nosed as the VP when it comes to starting meetings? “A deeper look is surely in order: Are your results consistent with others’ experiences in the company? Are some days worse than others? Which starts later: Conference calls or face-to-face meetings? Is there a relationship between meeting start time and most senior attendee? Return to step one, pose the next group of questions, and repeat the process. Keep the focus narrow; two or three questions at most.”
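To make the middle steps above a little more concrete, here is a minimal Python sketch of the ‘summary statistics’ and ‘what else does the data reveal?’ stages. Everything in it is an assumption for illustration: the meeting log, the organiser names, the function names and the idea that ‘on time’ means zero minutes late are all made up, and are not taken from the HBR article. In practice you would replace the list with your own observations and agree your own definition of when a meeting ‘begins’.

```python
from datetime import datetime
from statistics import mean

# Hypothetical observations: (organiser, scheduled start, actual start).
# These values are invented for illustration only.
MEETINGS = [
    ("VP Finance", "2024-03-04 09:00", "2024-03-04 09:00"),
    ("Ops lead",   "2024-03-04 11:00", "2024-03-04 11:12"),
    ("HR partner", "2024-03-05 14:00", "2024-03-05 14:08"),
    ("VP Finance", "2024-03-06 10:00", "2024-03-06 10:00"),
    ("Ops lead",   "2024-03-07 15:30", "2024-03-07 15:50"),
]

FMT = "%Y-%m-%d %H:%M"


def minutes_late(scheduled, actual):
    """Minutes between the scheduled and the observed start."""
    delta = datetime.strptime(actual, FMT) - datetime.strptime(scheduled, FMT)
    return delta.total_seconds() / 60


def summarise(meetings, on_time_tolerance=0):
    """Summary statistics for the question 'do meetings really start late?'."""
    delays = [minutes_late(s, a) for _, s, a in meetings]
    on_time = [d for d in delays if d <= on_time_tolerance]
    return {
        "meetings": len(delays),
        "share_on_time": len(on_time) / len(delays),
        "mean_minutes_late": mean(delays),
        "total_minutes_lost": sum(delays),
    }


def on_time_organisers(meetings, on_time_tolerance=0):
    """Organisers whose observed meetings all started on time."""
    by_organiser = {}
    for organiser, s, a in meetings:
        by_organiser.setdefault(organiser, []).append(minutes_late(s, a))
    return sorted(
        organiser
        for organiser, delays in by_organiser.items()
        if all(d <= on_time_tolerance for d in delays)
    )


summary = summarise(MEETINGS)
print(summary)
print(on_time_organisers(MEETINGS))
```

The `on_time_tolerance` parameter is there because the definition of ‘late’ is a judgement call; setting it to a few minutes would reflect the ‘within a few minutes of the scheduled start’ reading mentioned above. The same list of delays could also be fed into a plotting tool to draw the time-series picture.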

This approach can be applied to most of what L&D is accountable for, including:

- Induction

Is our company induction experience having a significant bearing on performance and engagement?

- New Managers

What is the consequence of leaving inexperienced and unsupported new managers in charge of teams?

- Project Management

Is the failure to deliver projects on time and on budget a problem?

Now this might seem like a lot of work up front, but it certainly takes less time than trying to find ROI that doesn’t exist on programmes delivered by request.

Data analysis is already one of the least developed skills in L&D and yet one of the most sought after skills in business, so it’s coming. But I believe it is accessible, if we apply the methodology above to understand both ‘should we work on this?’ and ‘did our intervention work?’ The alternative is to continue doing what we’ve always done: chasing ROI down another rabbit hole and making ourselves irrelevant whilst the rest of the business world embraces data.

We needn’t concede power to stakeholders in the activities we undertake, but we must let outcomes dictate activities and not let activities remain as the outcome. When stakeholders request training they are either playing out a long-established routine, or they don’t really care about outcomes. Our role is to determine which it is and apply our attention and resources to business outcomes and spend as little as possible on activities that deliver little, if any, returns.

Invest in the upfront conversation to find out what the real problems are; how you may intervene to make a demonstrable difference; what moving the needle really looks like and will mean; and what data you can use at the very outset in order to modernise, add real value and grow as an L&D practitioner in a profession that is crying out for development in these areas.

We have to just stop this silliness of working on something because we’ve been asked to, or because it’s the latest thing in HR, and then bolting ‘evaluation’ on the end to justify our existence. Investing up front in understanding what’s really going on and relying on data will be a huge leap for L&D.